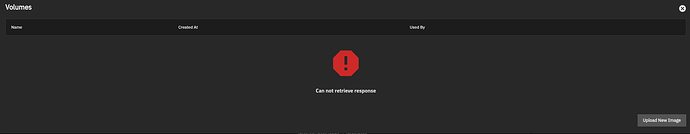

I think storage volumes will work fine for shared data for your Docker applications. There are drawbacks, though, like not being able to drill down the directory tree to reach the data easily. Once it's imported into Incus, the volume goes dark in the UI and you can't cd down the path to the files. Since you can't see the volumes, you can't mount them in the Web UI either. Otherwise, it should work much like host mount points.

On the other hand, it's managed within Incus, so permissions should work. If we get an image running with the Incus Web UI, managing the storage and volumes within Incus would probably be easy, since it uses the ZFS driver to handle datasets, volumes, snapshots, etc.

This was just a quick setup and test, and I'm probably missing some best practices. This should persist as it's being created in the default profile. Thoughts, suggestions?

Create volume:

    incus storage volume create default docker size=50GiB zfs.block_mode=true block.filesystem=ext4

Default storage info:

    incus storage show default
    config:
      source: sol/.ix-virt
      zfs.pool_name: sol/.ix-virt
    description: ""
    name: default
    driver: zfs
    used_by:
    - /1.0/images/d500c0445c2514fc514add9df99e433e208e3d4102fed497c807f24ab5a09146
    - /1.0/instances/docker1
    - /1.0/instances/docker2
    - /1.0/profiles/default
    - /1.0/storage-pools/default/volumes/custom/docker
    status: Created
    locations:
    - none

Volume info:

    incus storage volume show default docker
    config:
      block.filesystem: ext4
      block.mount_options: discard
      size: 50GiB
      volatile.idmap.last: '[{"Isuid":true,"Isgid":false,"Hostid":2147000001,"Nsid":0,"Maprange":458752},{"Isuid":false,"Isgid":true,"Hostid":2147000001,"Nsid":0,"Maprange":458752}]'
      volatile.idmap.next: '[{"Isuid":true,"Isgid":false,"Hostid":2147000001,"Nsid":0,"Maprange":458752},{"Isuid":false,"Isgid":true,"Hostid":2147000001,"Nsid":0,"Maprange":458752}]'
      zfs.block_mode: "true"
    description: ""
    name: docker
    type: custom
    used_by:
    - /1.0/instances/docker1
    - /1.0/instances/docker2
    location: none
    content_type: filesystem
    project: default
    created_at: 2025-02-25T20:18:29.231566987Z
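
For anyone wondering what the `volatile.idmap` entries mean for permissions, here is a small sketch of the arithmetic, assuming the usual idmap semantics: container ID `Nsid + n` maps to host ID `Hostid + n` for `n` below `Maprange`. The numbers are copied from the output above; `to_host_id` is just a helper name I made up for illustration, not an Incus command.

```shell
#!/bin/sh
# Values taken from the volatile.idmap entries shown above.
hostid=2147000001   # "Hostid": first host ID in the mapped range
nsid=0              # "Nsid": first ID as seen inside the container
maprange=458752     # "Maprange": number of consecutive IDs mapped

# to_host_id is a hypothetical helper: it prints the host ID that a
# container UID/GID maps to, or "unmapped" if it is outside the range.
to_host_id() {
    cid=$1
    if [ "$cid" -lt "$nsid" ] || [ "$cid" -ge $((nsid + maprange)) ]; then
        echo "unmapped"
        return 1
    fi
    echo $((hostid + cid - nsid))
}

to_host_id 0      # container root
to_host_id 1000   # a typical first regular user
```

With these values, root inside either container shows up as 2147000001 on the host. Both instances carry the same map, which is presumably why files written by one are owned by root when viewed from the other.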

ZFS datasets:

    zfs list|grep ^sol/.ix-virt
    sol/.ix-virt                           1.88G  1.02T    96K  legacy
    sol/.ix-virt/buckets                     96K  1.02T    96K  legacy
    sol/.ix-virt/containers                1.38G  1.02T    96K  legacy
    sol/.ix-virt/containers/docker1         708M  1.02T   960M  legacy
    sol/.ix-virt/containers/docker2         708M  1.02T   960M  legacy
    sol/.ix-virt/custom                    1.84M  1.02T    96K  legacy
    sol/.ix-virt/custom/default_docker     1.75M  1.02T  1.75M  -
    sol/.ix-virt/deleted                    255M  1.02T    96K  legacy
    sol/.ix-virt/deleted/buckets             96K  1.02T    96K  legacy
    sol/.ix-virt/deleted/containers          96K  1.02T    96K  legacy
    sol/.ix-virt/deleted/custom              96K  1.02T    96K  legacy
    sol/.ix-virt/deleted/images             254M  1.02T    96K  legacy
    sol/.ix-virt/deleted/images/d3d195e18e3f6ad0ec8b8c69adfa46e46074410e10a214173536f37c18c33e92   254M  1.02T   254M  legacy
    sol/.ix-virt/deleted/virtual-machines    96K  1.02T    96K  legacy
    sol/.ix-virt/images                     254M  1.02T    96K  legacy
    sol/.ix-virt/images/d500c0445c2514fc514add9df99e433e208e3d4102fed497c807f24ab5a09146   254M  1.02T   254M  legacy
    sol/.ix-virt/virtual-machines

Attach to instances:

    incus config device add docker1 docker disk source=docker pool=default path=/mnt/foo
    incus config device add docker2 docker disk source=docker pool=default path=/mnt/foo
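
If more instances need the same volume, the two commands above could be wrapped in a loop. This is only a sketch: `attach_volume` is a helper name I made up, and the script defaults to a dry run that prints the commands rather than running them (set `INCUS=incus` to execute for real). The pool, volume, path, and instance names are the ones from this test.

```shell
#!/bin/sh
# Dry run by default: prints each incus command instead of executing it.
# Export INCUS=incus before running to apply the changes.
INCUS="${INCUS:-echo incus}"

pool=default
volume=docker
mount_path=/mnt/foo

# attach_volume is a hypothetical helper, not part of Incus.
# It adds the custom volume to one instance as a disk device,
# using the same arguments as the manual commands above.
attach_volume() {
    instance=$1
    $INCUS config device add "$instance" "$volume" disk \
        source="$volume" pool="$pool" path="$mount_path"
}

for instance in docker1 docker2; do
    attach_volume "$instance"
done
```

Keeping the dry run as the default makes it easy to review exactly what would be sent to Incus before touching a live instance.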

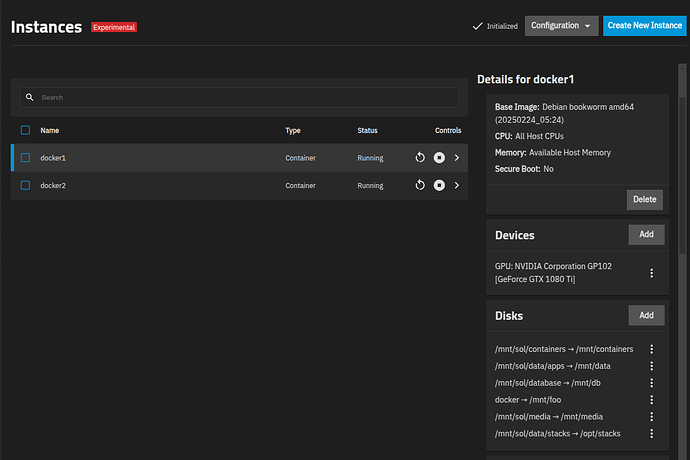

Instance configuration:

    volatile.idmap.base: "0"
    volatile.idmap.current: '[{"Isuid":true,"Isgid":false,"Hostid":2147000001,"Nsid":0,"Maprange":458752},{"Isuid":false,"Isgid":true,"Hostid":2147000001,"Nsid":0,"Maprange":458752}]'
    volatile.idmap.next: '[{"Isuid":true,"Isgid":false,"Hostid":2147000001,"Nsid":0,"Maprange":458752},{"Isuid":false,"Isgid":true,"Hostid":2147000001,"Nsid":0,"Maprange":458752}]'
    docker:
      path: /mnt/foo
      pool: default
      source: docker
      type: disk

Touch files:

    root@docker1:~# cd /mnt/foo && touch docker1
    root@docker2:~# cd /mnt/foo && touch docker2

List files in volume:

    ls -l /mnt/foo/
    total 16
    -rw-r--r-- 1 root root     0 Feb 25 15:52 docker1
    -rw-r--r-- 1 root root     0 Feb 25 15:52 docker2
    drwx------ 2 root root 16384 Feb 25 15:18 lost+found

Disk usage:

    df -h /mnt/foo
    Filesystem                                    Size  Used Avail Use% Mounted on
    /dev/zvol/sol/.ix-virt/custom/default_docker   49G   24K   47G   1% /mnt/foo

Screenshot of volume added in UI:

Also, adding a volume this way breaks image uploads in the Web UI.