No, they are not integrated graphics cards; all 3 are discrete graphics cards. The sequence I followed was:

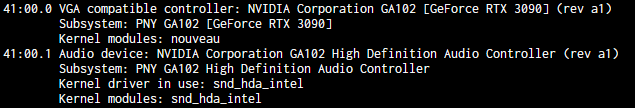

1 - Installed an NVIDIA 1060 and an NVIDIA 3090 in PCIe slots and plugged them in.

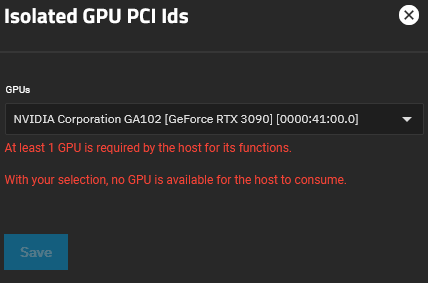

2 - Installed the drivers from the GUI for both the 1060 and the 3090. Both worked for Plex transcoding and were selectable from other apps as well.

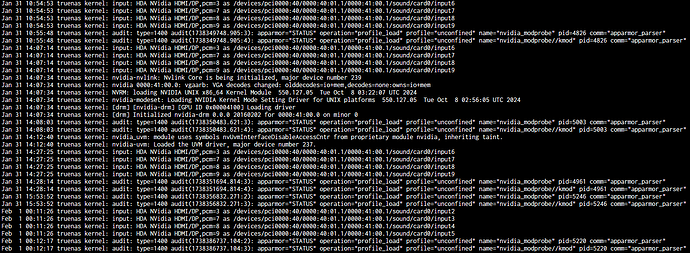

3 - Rebooted the system, and the NVIDIA drivers stopped working.

4 - Uninstalled the drivers via the GUI and reinstalled them.

5 - Rebooted a few times with no change, reinstalling the drivers and rebooting again in between.

6 - Unplugged the power to one of the GPUs and turned on the system to see if that would help, alternating which GPU received power. It still did not work.

7 - Reinstalled the boot-pool (I think with both GPUs powered on, or maybe only one?). The drivers worked again and the GPUs were selectable in Docker apps again, although to use the GPU in an app I had to remove and reinstall that app.

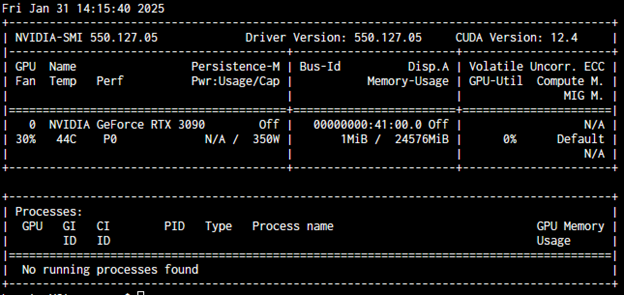

8 - To check whether rebooting was what caused the issue, I rebooted the system, immediately ran `nvidia-smi`, and got the same error again: `NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.`

9 - At this point I had practically given up, but I had one more GPU to try in case the problem was related to the specific cards (unlikely, but worth a try). I powered off the TrueNAS system, replaced the 3090 with a 3080 from my gaming desktop, turned the system back on, and ran `nvidia-smi` again, but got the same error.

10 - Powered off the machine and unplugged all the GPUs (leaving them in their PCIe slots) to save power, since they weren't doing anything anyway, and ran the system without a GPU.

11 - This week I saw that the v24.10.2 changelog suggested the issue might be fixed, so I powered off TrueNAS and replaced the 3080 with the original 3090. I plugged in both the 3090 and the 1060, ran the upgrade to v24.10.2, then ran `nvidia-smi`, and was disappointed again.

12 - Tried uninstalling and reinstalling the GPUs again, which didn't make a difference. Rebooting a few times also made no difference.

Note: When running Install NVIDIA Drivers, the checkboxes I have selected are “stable” and “community”.