I note you are running as admin@TrueNAS, which is a regular user account (signified by the $ prompt), whereas I was running as root (signified by the # prompt). I don’t know whether that will make a difference for you.

Here’s what I did and what I presumed:

(1) I tried a lot of things on my own and tested by running Frigate under “pure” Docker. It didn’t work, so I raised the “Nvidia container toolkit” feature request, believing the toolkit to be necessary for running Frigate.

(2) @dasunsrule32 replied and said:

> This is already installed on the TNCE host by default

which I now presume means the effort I expended in (1) above was unnecessary. I then followed the posted instructions by opening a terminal over an SSH session into TrueNAS and running:

    root@truenas[~]# nvidia-container-cli list | grep -vFf <(nvidia-container-cli list --libraries)

which responded as per the post with

    /dev/nvidiactl
    /dev/nvidia-uvm
    /dev/nvidia-uvm-tools
    /dev/nvidia-modeset
    /dev/nvidia0
    /usr/bin/nvidia-smi
    /usr/bin/nvidia-debugdump
    /usr/bin/nvidia-persistenced
    /usr/bin/nvidia-cuda-mps-control
    /usr/bin/nvidia-cuda-mps-server
    /lib/firmware/nvidia/550.127.05/gsp_ga10x.bin
    /lib/firmware/nvidia/550.127.05/gsp_tu10x.bin
    root@truenas[~]#

so then I followed the next command which is

    # nvidia-container-cli -V

which also correctly responded, with

    cli-version: 1.17.5
    lib-version: 1.17.5
    build date: 2025-03-07T15:46+00:00
    build revision: f23e5e55ea27b3680aef363436d4bcf7659e0bfc
    build compiler: x86_64-linux-gnu-gcc-7 7.5.0
    build platform: x86_64
    build flags: -D_GNU_SOURCE -D_FORTIFY_SOURCE=2 -DNDEBUG -std=gnu11 -O2 -g -fdata-sections -ffunction-sections -fplan9-extensions -fstack-protector -fno-strict-aliasing -fvisibility=hidden -Wall -Wextra -Wcast-align -Wpointer-arith -Wmissing-prototypes -Wnonnull -Wwrite-strings -Wlogical-op -Wformat=2 -Wmissing-format-attribute -Winit-self -Wshadow -Wstrict-prototypes -Wunreachable-code -Wconversion -Wsign-conversion -Wno-unknown-warning-option -Wno-format-extra-args -Wno-gnu-alignof-expression -Wl,-zrelro -Wl,-znow -Wl,-zdefs -Wl,--gc-sections
    root@truenas[~]#

which showed that the container toolkit is properly installed and working. To confirm things further, I restarted the Docker service

    # systemctl restart docker

and then I tried

    # nvidia-ctk runtime configure --runtime=docker

which resulted in

    {
        "data-root": "/mnt/.ix-apps/docker",
        "default-address-pools": [
            {
                "base": "172.17.0.0/12",
                "size": 24
            }
        ],
        "default-runtime": "nvidia",
        "exec-opts": [
            "native.cgroupdriver=cgroupfs"
        ],
        "iptables": true,
        "runtimes": {
            "nvidia": {
                "args": [],
                "path": "nvidia-container-runtime"
            }
        },
        "storage-driver": "overlay2"
    }

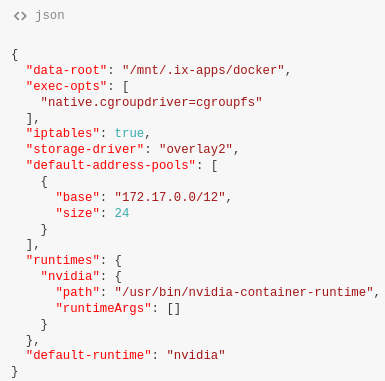

and finally I did

    cat /etc/docker/daemon.json

which showed me

    {"data-root": "/mnt/.ix-apps/docker", "exec-opts": ["native.cgroupdriver=cgroupfs"], "iptables": true, "storage-driver": "overlay2", "default-address-pools": [{"base": "172.17.0.0/12", "size": 24}], "runtimes": {"nvidia": {"path": "/usr/bin/nvidia-container-runtime", "runtimeArgs": []}}, "default-runtime": "nvidia"}

which all looked similar to the above.

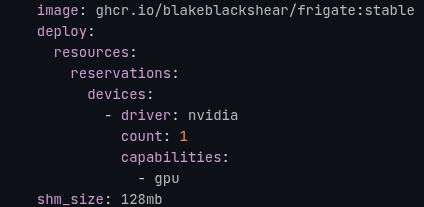

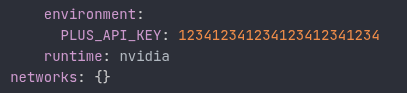
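The two outputs are not quite identical: the nvidia-ctk output shows "args" and a bare "nvidia-container-runtime" path, while daemon.json has "runtimeArgs" and the full /usr/bin path. Both do set "default-runtime" to "nvidia", which is the part I was looking for. For anyone who would rather script that check than eyeball it, here is a minimal sketch, assuming only that grep is available. The function name has_nvidia_default_runtime is hypothetical and not part of any Nvidia or TrueNAS tool.

```shell
# Hypothetical helper: succeeds if the given Docker daemon config sets
# "default-runtime" to "nvidia". This is a plain-text grep match, not a
# real JSON parse, so treat it as a rough check only.
has_nvidia_default_runtime() {
    config_file="${1:-/etc/docker/daemon.json}"
    grep -Eq '"default-runtime"[[:space:]]*:[[:space:]]*"nvidia"' "$config_file"
}

# Example call (as root on the TrueNAS host):
#   has_nvidia_default_runtime /etc/docker/daemon.json && echo "nvidia is the default runtime"
```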

At that point I tried Frigate again via docker compose and got the same errors. I also caused myself trouble with the existing Frigate app, so I stopped experimenting for the time being (I don’t want to cause myself even more work for now!).

Conclusion: the Nvidia container toolkit is indeed installed and working, but I am doing something else wrong (or there is a different problem I’m not yet aware of).
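If you want to report which toolkit version you have when comparing notes, the version line can be pulled out of the -V output shown earlier. This is only a sketch, assuming the output keeps the "cli-version: ..." format I got; the helper name parse_cli_version is hypothetical.

```shell
# Hypothetical helper: reads "nvidia-container-cli -V" output on stdin and
# prints the value from the "cli-version" line.
parse_cli_version() {
    awk -F': *' '$1 == "cli-version" { print $2 }'
}

# Example call on the host:
#   nvidia-container-cli -V | parse_cli_version
```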

> So do I need to make a separate container with the nvidia toolkit, and then link the frigate container to use it?

No. I wondered whether that would be the case, but several searches and a chat with an AI convinced me that Docker within TrueNAS now has the Nvidia container toolkit available to it, and hence any containers you create should be able to use the toolkit without a separate toolkit container.
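One way to test that assumption directly, without involving Frigate at all, is the sample workload from Nvidia's container toolkit documentation: run nvidia-smi inside a throwaway container. A minimal sketch, assuming the host can pull the ubuntu image; the wrapper name gpu_smoke_test is hypothetical, and I have not confirmed how this behaves on every TrueNAS release.

```shell
# Hypothetical wrapper around the sample workload from the Nvidia container
# toolkit docs: start a throwaway container with GPU access and run
# nvidia-smi inside it. The image defaults to "ubuntu" if none is given.
gpu_smoke_test() {
    image="${1:-ubuntu}"
    docker run --rm --runtime=nvidia --gpus all "$image" nvidia-smi
}

# Example call (as root on the TrueNAS host):
#   gpu_smoke_test ubuntu
```

If this prints the usual nvidia-smi table, that would suggest Docker can hand the GPU to a container, and the remaining problem is more likely in the Frigate compose file than in the toolkit.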

Additionally:

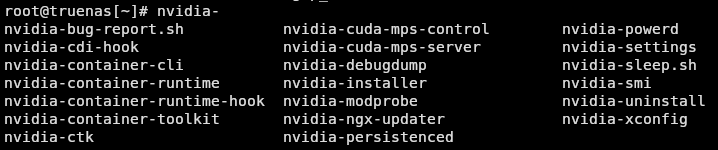

root@truenas[~]# nvidia-container-cli list should then list all the Nvidia tools and shared objects (libraries of some sort), which demonstrates that the container toolkit is present and working. In fact, typing nvidia and pressing “tab” will show lots of options, for example