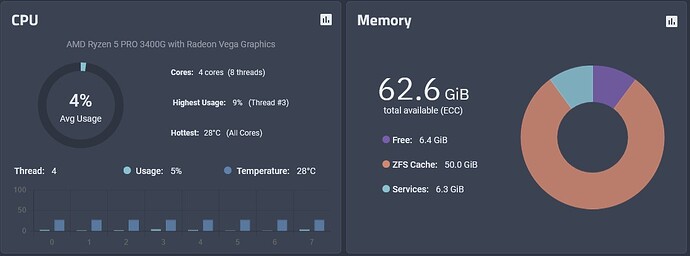

16GB Core system, with 42TiB of disk (4x16TB raidz). I run a few small jails, but mostly it's an SMB server for my home, both media and files.

This system was built in August 2016, and the DDR4 ECC memory cost me $400. A short while later, the memory cost DOUBLED for a while. It is currently running Dragonfish-24.04.2.3.

For a few years my TNS was a VMware ESX VM running on a desktop platform. At that time this “desktop server” had 128GB of non-ECC DDR4 RAM installed, and the TNS VM had 64GB assigned.

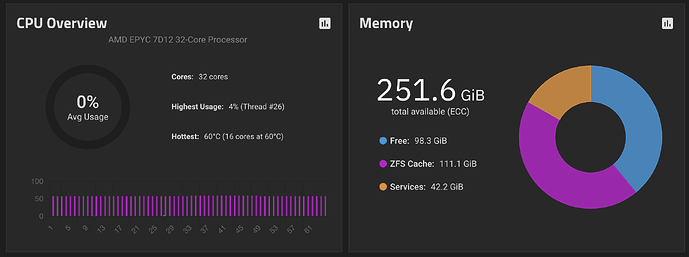

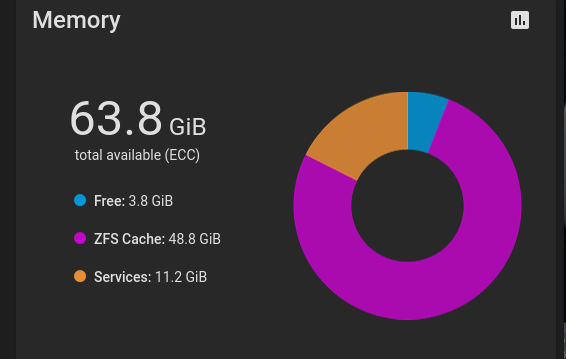

Recently I switched to a server board with an EPYC, plus I kicked what is now Broadcom’s ESX you know where. The new platform has 192GB of ECC DDR4 installed and runs Proxmox, and the TNS VM has 96GB of RAM assigned.

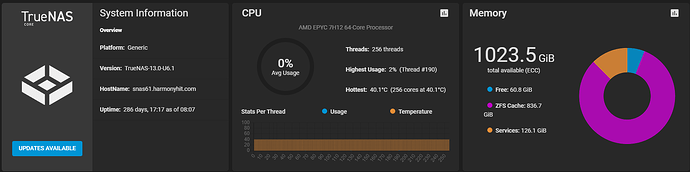

A “small” project from a while back. Also home to 24 x 30.72TB Micron NVMe drives and 4 x 100GbE NICs.

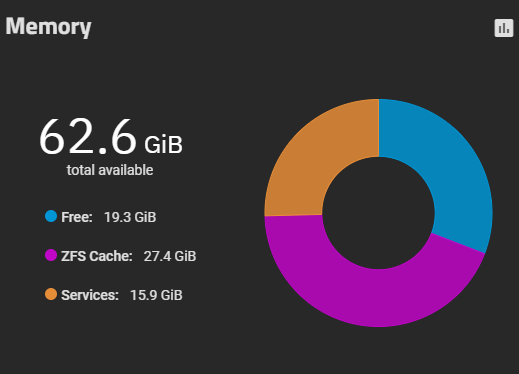

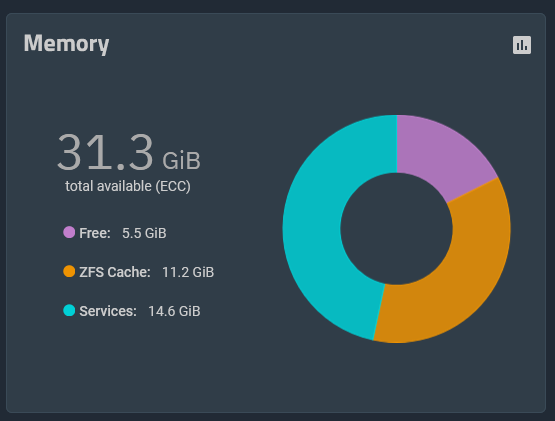

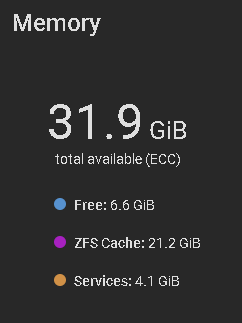

This is the result with 8GB allotted to running VMs.

At a minimum, 1GB of memory per usable TB, plus additional memory if your system will also be hosting VMs.
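To put numbers on that rule of thumb, here is a minimal sketch. The function name `recommended_ram_gb` and its parameters are made up for illustration; the 1GB-per-TB ratio is just the guideline quoted above, not an official sizing formula.

```python
def recommended_ram_gb(usable_tb: float, vm_ram_gb: float = 0.0,
                       gb_per_tb: float = 1.0) -> float:
    """Rule-of-thumb minimum RAM in GB.

    usable_tb  -- usable pool capacity in TB
    vm_ram_gb  -- memory set aside for VMs (hypothetical parameter)
    gb_per_tb  -- guideline ratio; 1 GB per TB as quoted in the thread
    """
    if usable_tb < 0 or vm_ram_gb < 0 or gb_per_tb < 0:
        raise ValueError("sizes must be non-negative")
    return usable_tb * gb_per_tb + vm_ram_gb


if __name__ == "__main__":
    # Example: 24 TB usable with 8 GB reserved for VMs.
    print(recommended_ram_gb(24, vm_ram_gb=8))
```

By this guideline a 24TB pool with 8GB set aside for VMs would want at least 32GB. Treat it as a floor for planning, not a guarantee of performance.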

I have 2 TrueNAS Scale servers (Primary and Secondary).

My Primary has 24TB of storage (RaidZ2 5x8TB hdd) and 64GB memory.

My Secondary has 18TB of storage (RaidZ2 5x6TB hdd) and 32 GB memory.

Why is this important?

The given reason is a few days back

Missed that, thanks for pointing it out for me.

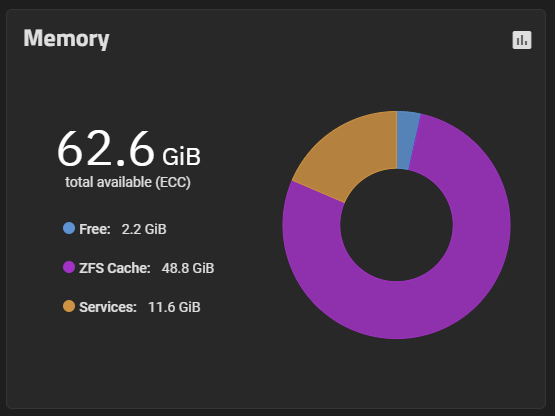

This post makes me wonder. Is the ZFS cache set by the amount of RAM available, amount of disk space, or how much activity is going on?

The amount of total RAM available to the system. The ARC will grow until it hits a specific % of total RAM, or whatever you’ve set as the manual max. It’ll also release memory fairly dynamically if anything else pops up, to avoid OOM.
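If you want to check this on your own box, here is a rough sketch that reads the ARC counters and compares them to total RAM. It assumes a Linux-based system with OpenZFS exposing `/proc/spl/kstat/zfs/arcstats` (as on SCALE; Core is FreeBSD and exposes these differently). The helper names `parse_arcstats` and `arc_summary` are made up for this example.

```python
import os
from pathlib import Path

# Assumption: Linux OpenZFS kstat location; not present on FreeBSD-based Core.
ARCSTATS_PATH = Path("/proc/spl/kstat/zfs/arcstats")


def parse_arcstats(text: str) -> dict:
    """Parse 'name type data' rows into {name: int}, skipping header lines."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows have exactly three fields and a numeric value.
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def arc_summary(stats: dict, total_ram_bytes: int) -> dict:
    """Current ARC size, its ceiling (c_max), and its share of total RAM."""
    gib = 2 ** 30
    return {
        "arc_size_gib": stats["size"] / gib,
        "arc_max_gib": stats["c_max"] / gib,
        "arc_pct_of_ram": 100 * stats["size"] / total_ram_bytes,
    }


if __name__ == "__main__":
    if ARCSTATS_PATH.exists():
        # Total physical RAM via sysconf (available on Linux).
        total = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
        print(arc_summary(parse_arcstats(ARCSTATS_PATH.read_text()), total))
    else:
        print("arcstats not found; is this a Linux system with ZFS loaded?")
```

`size` is what the ARC currently holds and `c_max` is the ceiling it is allowed to grow to, so watching both over time shows the grow-and-release behaviour described above.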

Also, OP didn’t ask people to specify which version of TrueNAS they were running.

The results are actually meaningless, except…

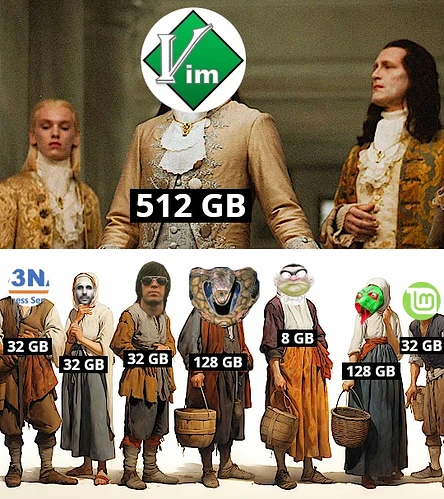

This picture makes me laugh until I cry.


But don’t forget to bow before @firesyde424 …