Prior to RAIDZ expansion, no. Once a vdev is created its size is static. Even with RAIDZ expansion there are limitations: you won’t get the full capacity until you do a full rebalance, which would take a very long time with that many disks, especially if they’re double-digit-TB disks. Not to mention that your pool’s performance will take a major hit for as long as the rebalance runs.

Hello, is there a simple way to do a full rebalance, please?

I had three 18 TB disks as JBOD on Windows, with 24 TB of data spread across the three disks.

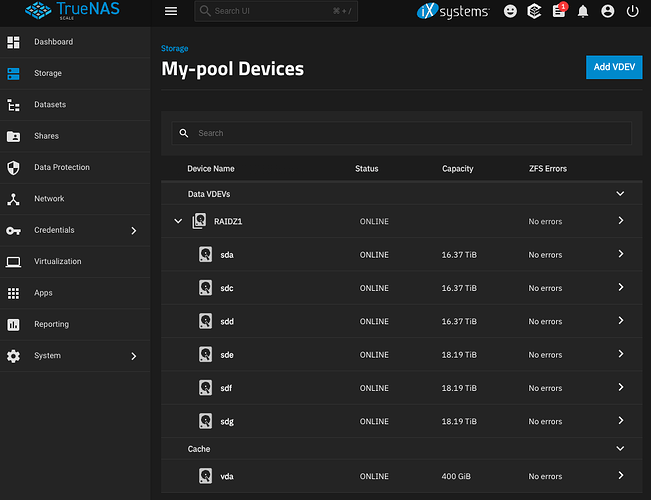

I bought three 16 TB drives and made a RAIDZ1 with TrueNAS.

Then I transferred the data and added the three 18 TB disks, one by one, to the 3 × 16 TB vdev.

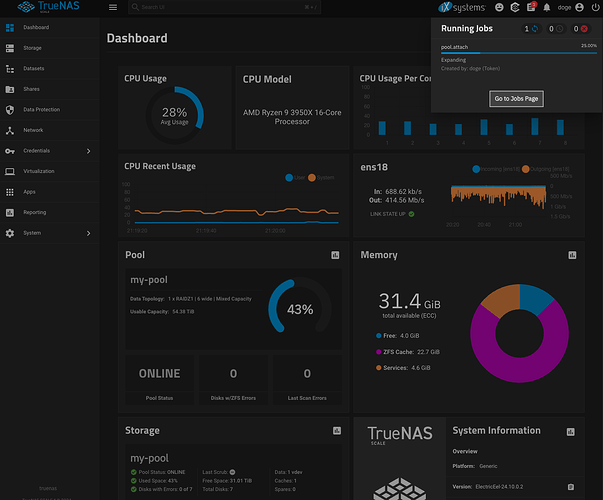

Now the last disk is in the process of being added.

I would like to do a full in-place rebalance after that.

Also, after adding two 18 TB disks to the 3 × 16 TB RAIDZ1, I only have 54 TB of usable capacity.

I expected 64 TB (4 × 16 = 64), so this is a little disappointing.

Why?

Best regards

To rebalance, run this:

As for space and capacity, reporting is WAY off in expanded raidz. Actual space is there, though.

It would be nice to have this script as a feature directly in the GUI.

And in my case TrueNAS displays 54 TB usable instead of 64 TB. That is a 10 TB difference, which is huge. I hope it will be fixed one day.

Talking of scripts, this one is also very useful:

Another feature that would be nice: notifications to Gotify instead of email, like in Proxmox. My mailbox is full of spam, so I’d like to have my notifications in a separate place.

You can always write a feature request.

Done:

What? I don’t think that’s the case. That is working as designed unless there has been an update that I didn’t know about. Refer to the very first post on this thread. For convenience, I posted it below.

It’s also mentioned in the manuals here:

Existing data blocks retain their original data-to-parity ratio and block width, but spread across the larger set of disks. New data blocks adopt the new data-to-parity ratio and width. Because of this overhead, an extended RAIDZ VDEV can report a lower total capacity than a freshly created VDEV with the same number of disks.

I think people are naively expecting that RAIDZ expansion somehow will come at zero cost/overhead, which is honestly a bit ridiculous.

@etorix is right.

Raidz Expansion space accounting is incorrect. There is more free space available than indicated and it’s not just because existing data is stored with the old parity ratio.

Rebalancing will not correct it.
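For what it’s worth, the 54 TB vs 64 TB figures reported earlier in the thread can be roughly reproduced with simple arithmetic. The sketch below is only an illustration: it assumes that space reporting keeps using the data-to-parity ratio the vdev had when it was created, and that every disk counts as the size of the smallest one (16 TB here). The function names are made up for this example, and it ignores padding, metadata overhead, and TB vs TiB differences.

```python
def fresh_capacity(disks, disk_tb, parity=1):
    """Usable capacity of a freshly created RAIDZ vdev (idealized)."""
    return (disks - parity) * disk_tb


def reported_after_expansion(disks_now, disks_at_creation, disk_tb, parity=1):
    """Capacity as reported if accounting still assumes the original ratio.

    ASSUMPTION: reporting applies the creation-time data-to-parity ratio
    to the raw space of the expanded vdev.
    """
    original_ratio = (disks_at_creation - parity) / disks_at_creation
    return disks_now * disk_tb * original_ratio


# Numbers from the thread: a 3-wide RAIDZ1 expanded to 5 disks,
# each disk counted as 16 TB.
expected = fresh_capacity(5, 16)
reported = reported_after_expansion(5, 3, 16)

print(f"fresh 5-wide RAIDZ1:      {expected:.1f} TB")   # 64.0 TB
print(f"reported after expansion: {reported:.1f} TB")   # 53.3 TB
```

Under that assumption the reported figure lands close to the 54 TB shown in the GUI, which would fit the point that this is an accounting issue and not space a rebalance can win back.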

This is the explanation

That’s interesting. Judging from the quote below, it looks like it will be a long time before it’s fixed (if at all). Something to keep in mind for those planning on using RAIDZ starting with only a few disks at first, because this is a huge PITA IMO.

Unfortunately I don’t have much time left to spend on this project so I would have to leave this for a follow-on project. I think that it shouldn’t be much more work to do this as an extension, where we have RAIDZ Expansion for a while and then we add “RAIDZ Expansion improved space accounting”, and blocks written after that point would have the improved accounting (that is, assuming it’s possible at all).

I’m struggling to understand why a rebalance is necessary at all. Why would I need to be concerned that old data is still at the old parity ratio? What does this materially mean for me as a user? Do I miss out on space or does anything visibly change if you rebalance or does nothing actually happen if you don’t do it?

I’m perfectly happy with old data having different parity if over time new data just gets written with the new ratio.

Minimally, on the old data.

Skip rebalancing then - unless I’m wrong, there’d be no other benefit.

So if the old data is deleted (or just happens to be moved or copied to somewhere else) that space is reclaimed anyway?

My understanding is that rebalancing just copies the old data, then deletes the original, to reclaim the space. Hence, yes, if the old data is deleted/moved off the pool, then the space is reclaimed anyway.
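To make the "copy, then delete the original" idea concrete, here is a minimal Python sketch of rewriting a single file in place. It is not the script linked earlier in the thread, just an illustration under stated assumptions: `rewrite_in_place` is a hypothetical helper name, the copy is done with an explicit read/write loop so the data is actually written out again, and the original is only replaced after a checksum comparison.

```python
import hashlib
import os
import shutil
import tempfile

CHUNK = 1024 * 1024  # copy in 1 MiB pieces


def _sha256(path):
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(CHUNK), b""):
            digest.update(chunk)
    return digest.hexdigest()


def rewrite_in_place(path):
    """Copy `path` to a temp file in the same directory, verify, then swap.

    Hypothetical helper: the new copy is allocated as freshly written data,
    and the old blocks are released once nothing else references them.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory, prefix=".rebalance-")
    try:
        with os.fdopen(fd, "wb") as dst, open(path, "rb") as src:
            for chunk in iter(lambda: src.read(CHUNK), b""):
                dst.write(chunk)
            dst.flush()
            os.fsync(dst.fileno())
        if _sha256(tmp) != _sha256(path):
            raise OSError(f"checksum mismatch while rewriting {path}")
        shutil.copystat(path, tmp)  # mode and timestamps, not ownership
        os.replace(tmp, path)       # atomic rename within one directory
    except BaseException:
        if os.path.exists(tmp):
            os.unlink(tmp)
        raise
```

Two caveats with this approach: old blocks that are still referenced by snapshots are not freed until those snapshots are destroyed, and hard links to the original file are broken by the replace.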

You can use the link to the spreadsheet in this article and play with parameters to estimate the lost space:

https://louwrentius.com/zfs-raidz-expansion-is-awesome-but-has-a-small-caveat.html

The thing is, if one expands a pool that is already quite full, one drive at a time, fills the pool and repeat, the loss is quite significant, amounting to one full drive after a few iterations.
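As a back-of-the-envelope version of what the spreadsheet estimates, the sketch below compares the raw space that data occupies at the old stripe width with what it would occupy if rewritten at the new width. It is an idealized model with hypothetical function names: it only uses the data-to-parity ratio and ignores padding, allocation granularity, and compression, so treat the result as a rough upper-level estimate, not what a real pool will show.

```python
def raw_footprint(data_tb, width, parity=1):
    """Raw space (data + parity) needed to store data_tb at a given width."""
    return data_tb * width / (width - parity)


def reclaimable_raw(data_tb, old_width, new_width, parity=1):
    """Raw space freed if data written at old_width is rewritten at new_width."""
    return raw_footprint(data_tb, old_width, parity) - raw_footprint(
        data_tb, new_width, parity
    )


# Numbers from the thread: 24 TB written to a 3-wide RAIDZ1,
# later expanded to 6 disks.
freed = reclaimable_raw(24, old_width=3, new_width=6)
print(f"raw space reclaimable by rewriting: {freed:.1f} TB")  # 7.2 TB
```

Repeating the calculation for each expansion step (for example 3 to 4, then 4 to 5, each time with a nearly full pool) shows how the overhead accumulates when old data is never rewritten.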

Sure, but deletes, copies, and moves of the data will naturally reclaim that space, right? I can see the issue if all your data is static and never moves around or gets deleted, but if you replace files or delete them over time then you’re just doing this in slow time, right?

Yes. But a raidz array filled with only dynamic data does not look like a very realistic use case to me. Hoarding data, and adding one drive each time the pool reaches xx%, is a more plausible scenario; this one would benefit from occasional rebalancing, free space allowing.

We have an extension calculator tool based on the same algorithms on the Docs Hub as well:

It’s worth reading that article from louwrentius though, as it discusses reasons for preferring to rebalance manually as compared to Jim Salter’s recommendation to not worry about it. Ultimately, it’s a personal preference and there is no real need to choose either option.

I just upgraded to Fangtooth, I am hoping this has matured a bit by now.

Just one question though: it’s going to take several days to expand my vdev, and I saw the nag to update the pool to the current ZFS version…

Does anyone have opinions about clicking the “Upgrade Pool” button while an expansion is in progress? I guess it should be fine; the pool is still online and doing its job while the expansion is in progress…