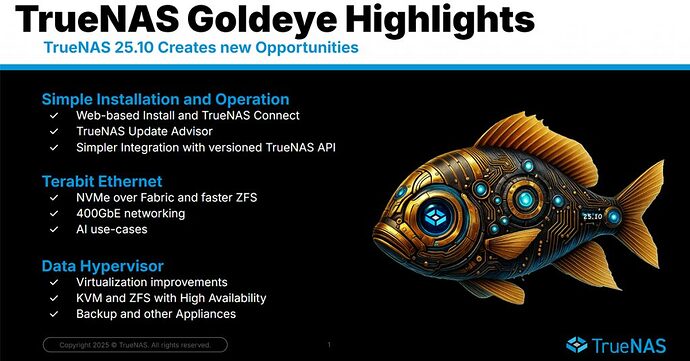

Since its initial release in April 2025, TrueNAS 25.04 “Fangtooth” has unified TrueNAS CORE and SCALE into the new Community Edition, reaching over 130,000 systems and becoming the most popular version of TrueNAS in use. Today, we’re releasing the public beta of the next version, TrueNAS 25.10 “Goldeye,” so the TrueNAS Community can begin testing and evaluating it and share their valuable feedback.

With dozens of new features and hundreds of fixes in TrueNAS “Goldeye,” testers are encouraged to put it through its paces as it continues to be refined for its October 2025 release. Full details are in the release notes on the TrueNAS Docs site, and some of the many highlights are below!

Updated Linux Kernel and NVIDIA Blackwell Support

The Linux Long-Term Support (LTS) Kernel has been updated from 6.12.15 to 6.12.33, improving hardware compatibility and addressing edge-case performance issues while offering a more reliable and stable experience.

TrueNAS 25.10 now uses the NVIDIA Open Source GPU Kernel modules with the 570.172.08 driver, adding support for the latest NVIDIA GPUs, including the RTX 50-series and RTX PRO Blackwell cards. With this change, support for several older GTX GPUs has been dropped, because NVIDIA’s open kernel modules do not support them. Please consult the list of compatible GPUs in NVIDIA’s GitHub repository and review the TrueNAS Community Forum thread to determine whether your card is supported by the new Open Kernel modules.

ZFS 2.3.3 Adds New Tools and Performance Boosts

ZFS File Rewrite is a TrueNAS-developed extension to OpenZFS 2.3.3 that rewrites datasets and files in place on your ZFS pool so they reflect the current vdev layout, compression algorithm, and deduplication settings. Because files remain accessible throughout, it can be used to rebalance data after vdev addition or RAIDZ expansion, and it does not change file modification times, ownership, or permissions. Goldeye will expose this capability through the TrueNAS CLI for advanced users.
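
Why rewriting rebalances data can be illustrated with a toy model. This Python sketch is hypothetical: it is not the OpenZFS allocator and not how File Rewrite is implemented. It assumes equal-sized blocks, places each new block on the vdev with the most free space (real ZFS only biases allocation toward emptier vdevs), and frees a block’s old location before writing its new copy. The vdev names are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Vdev:
    name: str
    capacity: int  # in equal-sized blocks (toy assumption)
    used: int = 0

    @property
    def free(self) -> int:
        return self.capacity - self.used


def allocate(vdevs: List[Vdev]) -> Vdev:
    # Toy stand-in for ZFS's free-space-biased allocation: pick the emptiest vdev.
    target = max(vdevs, key=lambda v: v.free)
    if target.free <= 0:
        raise RuntimeError("pool is full")
    target.used += 1
    return target


def rewrite_all(vdevs: List[Vdev]) -> None:
    # Record where every existing block lives, then rewrite each one once:
    # free the old copy, then place a new copy under the current layout.
    placements = [v for v in vdevs for _ in range(v.used)]
    for old in placements:
        old.used -= 1
        allocate(vdevs)


# Two vdevs at 80% full, plus a newly added, empty vdev (hypothetical pool).
pool = [Vdev("vdev-a", 100, 80), Vdev("vdev-b", 100, 80), Vdev("vdev-new", 100)]
rewrite_all(pool)
print({v.name: v.used for v in pool})
```

Before the rewrite the new vdev holds nothing; afterwards all three vdevs end up within one block of each other, while the total amount of data is unchanged.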

Faster caching comes from Adaptive Replacement Cache (ARC) improvements, including greater parallelization of operations and faster eviction of data that is no longer worth keeping in RAM. High-performance systems with many cores and fast NVMe devices will benefit most from these improvements.

DirectIO allows file protocols to bypass the ARC for datasets where caching does not improve performance. By skipping extra memory copies on fast pools, and by letting client workloads that know they will read data only once bypass the cache, TrueNAS keeps the ARC filled with more useful data, improving memory bandwidth and performance for specific High-Performance Computing (HPC) use cases.
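
A single Linux process can make the same kind of request for itself. The sketch below is an illustration under assumptions, not TrueNAS code: it opens a file with `O_DIRECT` to ask the kernel to bypass its cache for a read-once workload, and falls back to ordinary buffered reads wherever the filesystem refuses. On OpenZFS 2.3, whether such requests actually bypass the ARC is governed by the dataset’s `direct` property; the 4096-byte alignment is an assumed value, not a documented TrueNAS requirement.

```python
import mmap
import os

# Assumed alignment for O_DIRECT buffers, offsets, and lengths. The real
# requirement depends on the kernel, the filesystem, and (on ZFS) the dataset.
ALIGN = 4096


def _open(path: str, direct: bool) -> int:
    flags = os.O_RDONLY | (os.O_DIRECT if direct else 0)
    return os.open(path, flags)


def read_once(path: str, chunk: int = 1 << 20) -> bytes:
    """Read a whole file, asking the kernel to bypass its cache if it can."""
    if chunk <= 0 or chunk % ALIGN:
        raise ValueError("chunk must be a positive multiple of ALIGN")
    direct = hasattr(os, "O_DIRECT")
    try:
        fd = _open(path, direct)
    except OSError:
        if not direct:
            raise
        direct = False  # the filesystem rejected O_DIRECT at open time
        fd = _open(path, direct)
    buf = mmap.mmap(-1, chunk)  # anonymous mappings are page-aligned
    out = bytearray()
    try:
        while True:
            try:
                n = os.readv(fd, [buf])
            except OSError:
                if not direct:
                    raise  # a genuine I/O error, not an O_DIRECT limitation
                # Direct read refused (e.g. EINVAL at an unaligned offset near
                # EOF): reopen with buffered I/O and resume where we stopped.
                os.close(fd)
                fd = -1
                direct = False
                fd = _open(path, direct)
                os.lseek(fd, len(out), os.SEEK_SET)
                continue
            if n == 0:
                break
            out += buf[:n]
    finally:
        buf.close()
        if fd >= 0:
            os.close(fd)
    return bytes(out)
```

Falling back rather than failing keeps the function usable on filesystems without DirectIO support, at the cost of silently losing the cache bypass there.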

TrueNAS Versioned API Enhances Integration Options

A new, fully versioned, and much faster JSON-RPC 2.0 over WebSocket API has been introduced with TrueNAS 25.10, with documentation available at api.truenas.com. The previous REST API has been deprecated and will be fully removed in a future TrueNAS release.
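
For integrators, the wire format is standard JSON-RPC 2.0: each call is a JSON object with `jsonrpc`, `id`, `method`, and `params`, and the reply echoes the `id` with either a `result` or an `error`. The minimal Python sketch below builds and checks those envelopes only. The method name `system.info` is an assumption for illustration, and opening the WebSocket and authenticating are left out; consult api.truenas.com for the actual methods and endpoints.

```python
import itertools
import json
from typing import Any, List, Optional, Tuple

_ids = itertools.count(1)  # JSON-RPC ids let replies be matched to requests


class JsonRpcError(Exception):
    """Raised when the server answers with a JSON-RPC error object."""


def make_request(method: str, params: Optional[List[Any]] = None) -> Tuple[int, str]:
    req_id = next(_ids)
    msg = {"jsonrpc": "2.0", "id": req_id, "method": method, "params": params or []}
    return req_id, json.dumps(msg)


def parse_response(raw: str, expected_id: int) -> Any:
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0" or msg.get("id") != expected_id:
        raise ValueError("unexpected or mismatched JSON-RPC message")
    if "error" in msg:
        err = msg["error"]
        raise JsonRpcError(f"{err.get('code')}: {err.get('message')}")
    return msg["result"]


req_id, wire = make_request("system.info")  # hypothetical method name
print(wire)  # this string would be sent as one WebSocket text frame
# A simulated reply, standing in for what the server would send back:
fake_reply = json.dumps({"jsonrpc": "2.0", "id": req_id, "result": {"example": True}})
print(parse_response(fake_reply, req_id))
```

Checking the `id` on every reply matters because a WebSocket connection can carry several outstanding calls at once.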

This new versioned API provides predictable compatibility across TrueNAS upgrades for software integrations such as a Kubernetes Container Storage Interface (CSI) driver, the VMware vSphere plugin, Incus iSCSI connectivity, and the Proxmox “ZFS-over-iSCSI” plugin, among others.

With the updated API, the TrueNAS Web UI is more responsive and displays more accurate, up-to-date information, with lower overhead when generating reports or querying multiple processor and pool statistics. Power users can use the updated TrueNAS CLI, now integrated with the new API, for simpler access from text-based consoles while retaining the same audit controls and TrueNAS tooling.

New TrueNAS Update Advisor and Profiles

Previously, TrueNAS users receiving an update notice in the Web UI had to visit the TrueNAS Software Status page to match their user profile with newly released versions, which sometimes led to confusion.

TrueNAS 25.10 overhauls the update process, letting you select your “User Profile” directly in the Web UI. Select the release timing you’re interested in – General, Early Adopter, or Developer – and you’ll be alerted to updates only once they’ve moved to the matching profile on the Software Status page.
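
The alerting rule can be modeled in a few lines. This is a hypothetical sketch, not TrueNAS code, and it assumes the profiles form an ordered scale from most to least conservative, so a release that has reached the General profile would also alert Early Adopter and Developer users.

```python
from enum import IntEnum


class Profile(IntEnum):
    # Lower value = more conservative (an assumption of this sketch).
    GENERAL = 0
    EARLY_ADOPTER = 1
    DEVELOPER = 2


def should_alert(user: Profile, release: Profile) -> bool:
    # Alert when the release has reached a profile at least as conservative
    # as the one the user selected.
    return release <= user


print(should_alert(Profile.GENERAL, Profile.EARLY_ADOPTER))
print(should_alert(Profile.DEVELOPER, Profile.EARLY_ADOPTER))
```

Using an `IntEnum` makes the ordering explicit, so the rule stays a single comparison.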

The Web UI will also show a summary of each update’s Release Notes, highlighting the key changes in the new release, with a link to the full Release Notes for those who want to dig deeper.

Virtualization is Cleaner

TrueNAS 25.10-BETA includes separate tabs for its two Virtualization solutions. The experimental lightweight Linux Containers (LXC) are available under the Instances tab, and full KVM-powered Virtual Machines (VMs) are available under the Virtualization tab.

Both tabs in the UI have been updated to be easier to navigate, and include access to a TrueNAS-managed catalog of easy-to-deploy VM and LXC template images. The Virtualization UI includes all previous functionality, such as PCI passthrough, Secure Boot support with virtual TPM devices, as well as new methods to import VMs from popular disk formats such as VMDK and QCOW2.

Migration of VMs from both the “Virtualization” and “Instances” tabs – including the experimental Instance-powered VMs created in 25.04.0 and 25.04.1 – will be supported automatically. Configurations without PCI or USB passthrough are expected to migrate without issues. Some client operating systems inside the VM may require specific configuration before the upgrade, such as pre-loading virtual storage drivers so the VM can complete boot, or network reconfiguration if the MAC addresses of virtual NICs change. Users with production VMs are advised to verify compatibility and consider delaying their upgrade to 25.10-BETA until the process is more thoroughly documented.

The full release of TrueNAS 25.10 will include our “Petabyte Hypervisor,” which makes Virtualization available on TrueNAS Enterprise appliances with High Availability (HA), offering a platform for workloads that benefit from being close to high-performance storage. The same TrueNAS Enterprise appliance can continue to provide HA storage for traditional hypervisor environments powered by VMware, Proxmox, Hyper-V, XCP-ng, or other emerging technologies.

NVMe over Fabrics takes Performance to the Next Level

Just as NVMe has revolutionized locally attached solid-state drives, remote storage is ready to move beyond the limitations of the SCSI protocol with NVMe over Fabrics (NVMe-oF), extending the benefits of NVMe beyond the local PCIe bus.

TrueNAS 25.10 retains both of its existing iSCSI and Fibre Channel block storage protocols, and adds two more NVMe-oF options:

NVMe/TCP uses TCP as its transport, as iSCSI does, but carries NVMe commands and queueing instead of SCSI, removing the SCSI overhead. NVMe/TCP is supported by most client operating systems and is available in both TrueNAS Enterprise and Community Edition.
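
On a Linux client, attaching an NVMe/TCP target is commonly done with the `nvme` command from nvme-cli. The Python sketch below only assembles and prints the `discover` and `connect` command lines rather than running them. The address is from the 192.0.2.0/24 documentation range and the NQN is a placeholder, so substitute the subsystem NQN and ports from your TrueNAS NVMe-oF configuration. Port 4420 is the IANA-assigned NVMe-oF port and 8009 the NVMe/TCP discovery port, but a given target may listen elsewhere.

```python
import shlex
from typing import List


def nvme_tcp_commands(addr: str, nqn: str, port: int = 4420,
                      disc_port: int = 8009) -> List[List[str]]:
    # Ask the target's discovery controller which subsystems it exports.
    discover = ["nvme", "discover", "-t", "tcp", "-a", addr, "-s", str(disc_port)]
    # Connect to one subsystem by NQN; its namespaces then appear as /dev/nvmeXnY.
    connect = ["nvme", "connect", "-t", "tcp", "-a", addr, "-s", str(port), "-n", nqn]
    return [discover, connect]


# Placeholder address and NQN; replace with your target's values.
for argv in nvme_tcp_commands("192.0.2.10", "nqn.2024-01.org.example:placeholder"):
    print(shlex.join(argv))
```

Building argument lists instead of shell strings keeps the values safe to pass to `subprocess.run` later without shell quoting issues.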

NVMe/RDMA transmits the same NVMe commands over RDMA over Converged Ethernet (RoCE), delivering even higher performance than NVMe/TCP. Because of its direct memory access, network switch, and specific NIC requirements, NVMe/RDMA is supported only on TrueNAS Enterprise in combination with TrueNAS F-Series hardware.

More Web UI Improvements

The Goldeye Web UI brings several user experience improvements, including:

- More logical page layouts for Storage, Networking, and Alerts

- Improved iSCSI Wizard workflow

- Enhanced YAML editor for custom Apps

- More responsive statistics monitoring (CPU, pool usage)

Our legacy “iXsystems” name and logo have also been officially retired from the UI – we’re unified as TrueNAS across the hardware, software, and support worlds.

Enabling Pool Migrations for Apps

Another frequent request was the ability to migrate Apps between pools without manual reconfiguration. We’re pleased to announce that migrating Apps between pools on the same system is now available in TrueNAS 25.10, for users who have outgrown the capacity or performance of their existing configuration.

SMART gets SMARTer

SMART, short for “Self-Monitoring, Analysis, and Reporting Technology,” is the monitoring system built into storage devices to check their overall health, record statistics, and predict potential failures before they occur.

TrueNAS Goldeye automates the scheduling of SMART tests on supported devices and reduces false positives to prevent alert fatigue and the unnecessary e-waste of premature drive replacements.
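
For readers who script their own checks, smartmontools 7.0 and later can emit JSON via `smartctl -j`, including an overall `smart_status.passed` flag. The Python sketch below reads that flag and distinguishes a failed device from one that reported no overall status, one simple way to avoid raising an alert on missing data. It is an illustration only, not how TrueNAS decides when to raise SMART alerts.

```python
import json
import subprocess
from typing import Optional


def smart_passed(report: dict) -> Optional[bool]:
    # True/False for the drive's overall self-assessment; None if the device
    # did not report one (which should not be treated as a failure).
    status = report.get("smart_status")
    if not isinstance(status, dict) or "passed" not in status:
        return None
    return bool(status["passed"])


def query(device: str) -> dict:
    # smartctl's exit status is a bit mask that can be non-zero even when the
    # JSON output is valid, so check=True is deliberately not used here.
    proc = subprocess.run(["smartctl", "-j", "-H", device],
                          capture_output=True, text=True)
    return json.loads(proc.stdout)


# Offline examples with hand-written reports (no drive access needed):
print(smart_passed({"smart_status": {"passed": True}}))
print(smart_passed({"smart_status": {"passed": False}}))
print(smart_passed({}))
```

`query("/dev/sda")` would need smartmontools installed and sufficient privileges, so the examples above exercise only the parsing.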

TrueNAS Enterprise Appliances get Faster

Our line of TrueNAS Enterprise appliances is already showing benefits from the early Goldeye software as well:

- Higher capacities for both hybrid (30PB) and all-flash systems (20PB)

- Improved security (STIG) for defense-grade organizations

- Support for 400Gbps Ethernet interfaces

Additional improvements will be announced after further testing and validation. If your organization is interested in a TrueNAS appliance with existing or upcoming TrueNAS capabilities, please reach out to us, and we’ll be delighted to help you.

TrueNAS WebInstall and Dashboard

TrueNAS 25.10-BETA will also be the platform for testing the new WebInstall and Dashboard capabilities mentioned in our previous blog post; however, this system is still in closed ALPHA testing. Community trials are expected to begin in late September 2025, and we look forward to hearing your feedback.

When Should You Migrate?

If you’re deploying a new TrueNAS system today, we recommend TrueNAS 25.04.2.1 for its maturity, Docker integration, and broad testing results. Existing users should always review the Software Status page for current recommendations based on their profile.

For enthusiastic testers running non-production workloads, TrueNAS 25.10 “Goldeye” is now in its BETA testing phase. Users with production workloads are advised to wait for the official RELEASE version in October. TrueNAS 25.10 will be recommended for our more conservative and Enterprise users with mission-critical needs in the first months of 2026.