Hi! I'm a complete noob and wanted to extend my current drive to a new disk.

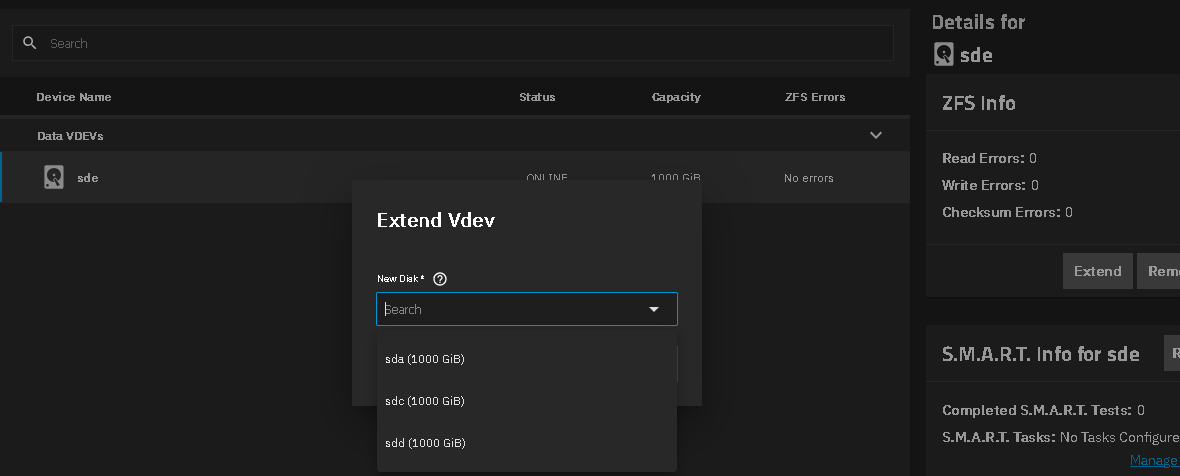

I think what happened is this: I went to Extend, but the new drive did not show up in the pick list for some reason. So I went the other route and added the drive to the pool, which clearly is not what I intended, as it eliminated the redundancy!

How do I remove the disk (sdb) from the pool and create a mirror of the first disk?
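For reference, my understanding is that at the ZFS command-line level this goal corresponds to two steps: `zpool remove` to evacuate and drop the disk that was added by mistake, then `zpool attach` to turn the original single disk into a mirror. The sketch below only builds and prints those commands; it does not run anything. `Pool1` is my pool name and `sdb` is the new disk, while `EXISTING_DISK` is a hypothetical placeholder for the original disk's identifier as shown by `zpool status`. The function name is made up for illustration, and on TrueNAS the GUI is the supported route, so treat this as a statement of intent rather than a procedure.

```python
import shlex


def mirror_conversion_plan(pool, existing_disk, new_disk):
    """Return the zpool commands (as argument lists) for the intended change.

    Nothing is executed here; the caller decides what to do with the plan.
    """
    return [
        # Inspect the current layout first to confirm the device names.
        ["zpool", "status", pool],
        # Remove the top-level disk that was added by mistake.
        # The removal has to finish before the next step makes sense.
        ["zpool", "remove", pool, new_disk],
        # Attach the freed disk to the original one, forming a mirror.
        ["zpool", "attach", pool, existing_disk, new_disk],
    ]


if __name__ == "__main__":
    # "EXISTING_DISK" is a placeholder, not a real device name.
    for cmd in mirror_conversion_plan("Pool1", "EXISTING_DISK", "sdb"):
        print(shlex.join(cmd))
```

The middle command is the step that fails for me from the GUI, as shown in the error that follows.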

TrueNAS starts to remove the disk and then throws this error:

Error: concurrent.futures.process._RemoteTraceback:

"""

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 71, in __zfs_vdev_operation

with libzfs.ZFS() as zfs:

File "libzfs.pyx", line 534, in libzfs.ZFS.__exit__

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 76, in __zfs_vdev_operation

op(target, *args)

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 89, in impl

getattr(target, op)()

File "libzfs.pyx", line 2386, in libzfs.ZFSVdev.remove

libzfs.ZFSException: cannot remove /dev/disk/by-partuuid/c71c5a2d-5fa6-4a10-992c-5e2e1bae3c90: permission denied

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/lib/python3.11/concurrent/futures/process.py", line 261, in _process_worker

r = call_item.fn(*call_item.args, **call_item.kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 116, in main_worker

res = MIDDLEWARE._run(*call_args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 47, in _run

return self._call(name, serviceobj, methodobj, args, job=job)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/worker.py", line 41, in _call

return methodobj(*params)

^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 178, in nf

return func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 125, in remove

self.detach_remove_impl('remove', name, label, options)

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 92, in detach_remove_impl

self.__zfs_vdev_operation(name, label, impl)

File "/usr/lib/python3/dist-packages/middlewared/plugins/zfs_/pool_actions.py", line 78, in __zfs_vdev_operation

raise CallError(str(e), e.code)

middlewared.service_exception.CallError: [EZFS_PERM] cannot remove /dev/disk/by-partuuid/c71c5a2d-5fa6-4a10-992c-5e2e1bae3c90: permission denied

"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/usr/lib/python3/dist-packages/middlewared/job.py", line 515, in run

await self.future

File "/usr/lib/python3/dist-packages/middlewared/job.py", line 560, in __run_body

rv = await self.method(*args)

^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 174, in nf

return await func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/schema/processor.py", line 48, in nf

res = await f(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/plugins/pool_/pool_disk_operations.py", line 229, in remove

await self.middleware.call('zfs.pool.remove', pool['name'], found[1]['guid'])

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1005, in call

return await self._call(

^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 728, in _call

return await self._call_worker(name, *prepared_call.args)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 734, in _call_worker

return await self.run_in_proc(main_worker, name, args, job)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 640, in run_in_proc

return await self.run_in_executor(self.__procpool, method, *args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/usr/lib/python3/dist-packages/middlewared/main.py", line 624, in run_in_executor

return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

middlewared.service_exception.CallError: [EZFS_PERM] cannot remove /dev/disk/by-partuuid/c71c5a2d-5fa6-4a10-992c-5e2e1bae3c90: permission denied
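The traceback is long, but the part that seems to matter is the final `CallError` line, which carries the libzfs error code (`EZFS_PERM`), the device path, and the reason. Here is a small sketch for pulling those fields out of a pasted traceback. The function name and the regular expression are my own illustration and are not part of the TrueNAS middleware.

```python
import re

# Matches lines such as:
#   middlewared.service_exception.CallError: [EZFS_PERM] cannot remove <path>: permission denied
CALL_ERROR = re.compile(
    r"CallError: \[(?P<code>[A-Z_]+)\] cannot (?P<op>\w+) (?P<device>\S+): (?P<reason>.+)"
)


def last_call_error(traceback_text):
    """Return code, operation, device and reason from the last CallError line, or None."""
    found = None
    for line in traceback_text.splitlines():
        match = CALL_ERROR.search(line)
        if match:
            # Keep overwriting so the final matching line wins.
            found = match.groupdict()
    return found


if __name__ == "__main__":
    sample = (
        "middlewared.service_exception.CallError: [EZFS_PERM] cannot remove "
        "/dev/disk/by-partuuid/c71c5a2d-5fa6-4a10-992c-5e2e1bae3c90: permission denied"
    )
    print(last_call_error(sample))
```

This only summarizes what the error already says (a `remove` on that partition was refused with "permission denied"); it does not explain why ZFS refused it.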

I have a snapshot of Pool1 (created in the GUI; is this the same as a checkpoint?) and a copy of the TrueNAS configuration file, both taken before adding the second disk in an attempt to capture the state of the system before I made this change. Will these help us roll back?