I have been looking at ways to speed up file transfers between my PC and TrueNAS ElectricEel-24.10.2.4, and any other device.

I have changed the LAN cable to Cat6a and replaced my failing Netgear 1Gb switch with a TP-Link 2.5Gb switch, but the transfer speed between my PC and TrueNAS is still only around 65 MB/sec.

The specs are:

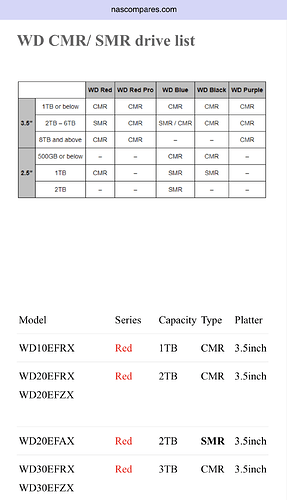

5 x 2TB Western Digital drives, all in one pool

16GB of Kingston ValueRAM ECC

Supermicro X95CM-F motherboard with Gigabit LAN ports

I googled how to do this, and the advice is basically to enable multichannel in the advanced SMB settings. When I do that, I get an error:

NetBIOS names may not be one of following reserved names: dialup, batch, gw, world, null, local, enterprise, builtin, anonymous, domain, self, interactive, network, gateway, proxy, server, restricted, users, authenticated user, internet, tac

So I guess it’s not a simple job then.

Edit:

I found a link to the TrueNAS documentation on setting up SMB multichannel, but if there is any other way of speeding up file transfers, I’d rather go that way if possible.

Thanks