@bkindel I had meant for you to send it as a PM so that your information wouldn't be publicly visible. I've flagged your post for moderation so the link can be edited out, and I can confirm I have the debug file.

This looks like it's been happening since 8/27. The pool history shows the pool being re-imported over and over, mostly about 65 minutes apart:

2024-08-27.03:50:48 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.03:50:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.04:55:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.04:55:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.06:00:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.06:00:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.07:05:51 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.07:05:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.08:10:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.08:10:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.09:15:47 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.09:15:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.10:20:48 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.10:20:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.11:25:47 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.11:25:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.12:30:47 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.12:30:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.13:35:46 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.13:35:47 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.14:40:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.14:40:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.15:45:48 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.15:45:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.16:50:48 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.16:50:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.17:55:47 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.17:55:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.19:00:48 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.19:00:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.20:05:48 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.20:05:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.21:10:48 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.21:10:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.22:15:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.22:15:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-27.23:20:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-27.23:20:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.00:25:48 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.00:25:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.01:30:48 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.01:30:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.02:35:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.02:35:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.03:40:50 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.03:40:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.04:45:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.04:45:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.05:50:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.05:50:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.06:55:50 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.06:55:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.08:00:50 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.08:00:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.09:05:51 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.09:05:52 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.10:10:52 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.10:10:52 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.11:15:51 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.11:15:52 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.12:20:51 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.12:20:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.12:48:20 zfs destroy POOL-4x5TB/.system/samba4@update--2023-02-16-01-48--12.0-U5.1

2024-08-28.12:48:20 zfs snapshot POOL-4x5TB/.system/samba4@update--2024-08-28-17-48--13.0-U6.1

2024-08-28.12:50:14 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.12:50:14 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.13:25:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.13:25:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.14:30:53 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.14:30:53 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.15:35:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.15:35:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.16:40:51 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.16:40:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.17:45:51 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.17:45:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.18:50:50 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.18:50:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.19:55:51 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.19:55:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.20:30:09 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.20:30:09 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.21:00:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.21:00:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.22:05:49 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.22:05:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-28.23:10:51 zpool import 11721839244249075587 POOL-4x5TB

2024-08-28.23:10:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.00:15:50 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.00:15:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.01:20:50 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.01:20:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.02:26:00 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.02:26:00 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.03:31:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.03:31:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.04:36:01 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.04:36:01 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.05:41:00 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.05:41:00 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.06:46:00 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.06:46:00 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.07:51:01 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.07:51:01 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.08:56:00 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.08:56:00 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.10:01:04 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.10:01:04 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.11:06:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.11:06:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.12:11:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.12:11:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.13:16:00 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.13:16:00 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.14:21:01 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.14:21:01 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.15:26:01 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.15:26:01 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.16:31:01 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.16:31:01 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.17:36:01 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.17:36:01 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.18:41:00 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.18:41:00 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.19:46:00 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.19:46:00 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.20:51:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.20:51:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.21:56:03 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.21:56:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-29.23:01:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-29.23:01:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.00:06:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.00:06:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.01:11:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.01:11:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.02:16:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.02:16:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.03:21:01 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.03:21:01 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.04:26:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.04:26:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.05:31:03 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.05:31:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.06:36:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.06:36:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.07:41:01 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.07:41:01 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.08:11:03 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.08:11:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.08:46:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.08:46:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.09:51:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.09:51:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.10:56:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.10:56:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.12:01:03 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.12:01:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.13:06:03 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.13:06:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.13:40:59 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.13:40:59 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.14:11:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.14:11:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.15:16:03 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.15:16:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.16:21:04 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.16:21:04 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.17:26:02 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.17:26:02 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.18:31:03 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.18:31:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.19:36:03 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.19:36:04 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.20:41:04 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.20:41:04 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.21:46:03 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.21:46:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.22:51:04 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.22:51:04 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-30.23:56:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-30.23:56:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.01:01:04 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.01:01:04 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.02:06:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.02:06:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.03:11:07 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.03:11:07 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.04:16:06 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.04:16:07 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.05:21:07 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.05:21:07 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.06:26:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.06:26:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.07:31:06 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.07:31:06 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.08:14:36 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.08:14:36 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.08:36:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.08:36:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.09:41:04 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.09:41:04 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.10:46:06 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.10:46:06 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.11:51:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.11:51:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.12:56:04 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.12:56:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.14:01:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.14:01:06 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.15:06:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.15:06:06 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.16:11:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.16:11:06 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.17:16:05 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.17:16:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.18:21:07 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.18:21:07 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.19:26:07 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.19:26:07 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.20:31:07 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.20:31:07 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.21:36:07 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.21:36:07 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.22:41:06 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.22:41:06 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-08-31.23:46:07 zpool import 11721839244249075587 POOL-4x5TB

2024-08-31.23:46:07 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.00:00:03 zpool scrub POOL-4x5TB

2024-09-01.00:51:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.00:51:34 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.01:56:41 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.01:56:41 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.03:01:15 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.03:01:15 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.04:06:17 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.04:06:17 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.05:11:22 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.05:11:22 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.06:16:23 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.06:16:23 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.07:21:21 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.07:21:21 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.08:26:18 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.08:26:18 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.09:31:20 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.09:31:20 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.10:36:17 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.10:36:17 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.11:41:37 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.11:41:37 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.15:35:35 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.15:35:35 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.16:50:32 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.16:50:32 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.17:06:41 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.17:06:41 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.18:11:45 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.18:11:45 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.13:55:47 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.13:55:47 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.19:16:10 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.19:16:10 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.14:57:17 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.14:57:17 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.20:21:41 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.20:21:41 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-01.21:26:23 zpool import 11721839244249075587 POOL-4x5TB

2024-09-01.21:26:23 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.08:52:27 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.08:52:27 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.09:36:10 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.09:36:10 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.10:41:13 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.10:41:13 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.11:46:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.11:46:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.12:51:17 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.12:51:17 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.13:56:23 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.13:56:23 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.15:01:18 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.15:01:18 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.16:06:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.16:06:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.17:11:19 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.17:11:19 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.18:16:22 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.18:16:22 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.19:21:21 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.19:21:21 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.20:26:25 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.20:26:25 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-02.21:31:26 zpool import 11721839244249075587 POOL-4x5TB

2024-09-02.21:31:26 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.07:37:05 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.07:37:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.08:21:37 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.08:21:37 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.09:26:15 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.09:26:15 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.10:31:27 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.10:31:27 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.11:36:33 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.11:36:33 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.12:41:22 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.12:41:22 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.13:46:25 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.13:46:25 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.14:51:26 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.14:51:26 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.15:56:35 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.15:56:35 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.16:02:19 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.16:02:19 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.17:01:39 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.17:01:39 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.18:25:42 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.18:25:42 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.19:11:20 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.19:11:20 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.20:16:15 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.20:16:15 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.21:21:23 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.21:21:23 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.22:26:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.22:26:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-03.23:31:21 zpool import 11721839244249075587 POOL-4x5TB

2024-09-03.23:31:21 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.00:36:23 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.00:36:23 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.01:41:47 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.01:41:47 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.02:46:41 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.02:46:41 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.03:51:15 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.03:51:15 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.04:56:23 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.04:56:23 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.06:01:26 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.06:01:26 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.07:06:24 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.07:06:24 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.08:11:19 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.08:11:19 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.09:16:24 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.09:16:24 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.10:21:53 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.10:21:53 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.11:26:39 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.11:26:39 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.12:31:22 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.12:31:22 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.13:36:21 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.13:36:21 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.14:41:27 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.14:41:27 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.16:03:36 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.16:03:36 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.16:51:17 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.16:51:17 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.17:12:20 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.17:12:20 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.17:56:41 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.17:56:41 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.19:01:41 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.19:01:41 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-04.20:06:45 zpool import 11721839244249075587 POOL-4x5TB

2024-09-04.20:06:45 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.07:40:18 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.07:40:18 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.08:01:40 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.08:01:40 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.09:06:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.09:06:34 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.10:11:48 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.10:11:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.10:43:08 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.10:43:08 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.11:16:39 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.11:16:39 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.12:21:40 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.12:21:40 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.13:26:52 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.13:26:52 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.14:13:35 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.14:13:35 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.14:31:51 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.14:31:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.15:36:54 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.15:36:54 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.16:54:11 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.16:54:11 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.17:46:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.17:46:34 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.18:51:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.18:51:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.19:56:36 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.19:56:36 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.21:01:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.21:01:34 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-05.22:06:53 zpool import 11721839244249075587 POOL-4x5TB

2024-09-05.22:06:53 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.07:41:50 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.07:41:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.07:51:36 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.07:51:36 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.08:56:51 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.08:56:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.10:01:37 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.10:01:37 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.11:06:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.11:06:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.11:38:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.11:38:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.12:11:32 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.12:11:32 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.13:16:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.13:16:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.14:21:27 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.14:21:27 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.15:26:51 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.15:26:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.16:31:54 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.16:31:54 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.17:36:59 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.17:36:59 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.18:41:58 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.18:41:58 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.19:46:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.19:46:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.20:26:10 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.20:26:10 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.20:51:38 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.20:51:38 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.22:22:07 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.22:22:07 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-06.23:01:53 zpool import 11721839244249075587 POOL-4x5TB

2024-09-06.23:01:53 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-07.14:53:12 zpool import 11721839244249075587 POOL-4x5TB

2024-09-07.14:53:12 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-07.15:52:41 zpool import 11721839244249075587 POOL-4x5TB

2024-09-07.15:52:41 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-07.16:44:49 zpool scrub -s POOL-4x5TB

2024-09-07.16:57:48 zpool import 11721839244249075587 POOL-4x5TB

2024-09-07.16:57:48 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-07.18:02:27 zpool import 11721839244249075587 POOL-4x5TB

2024-09-07.18:02:27 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-07.19:07:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-07.19:07:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-07.20:12:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-07.20:12:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-07.21:17:27 zpool import 11721839244249075587 POOL-4x5TB

2024-09-07.21:17:27 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-07.22:22:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-07.22:22:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-07.23:27:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-07.23:27:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.00:32:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.00:32:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.01:37:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.01:37:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.02:42:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.02:42:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.03:47:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.03:47:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.04:52:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.04:52:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.05:57:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.05:57:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.07:02:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.07:02:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.08:07:32 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.08:07:32 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.09:12:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.09:12:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.10:17:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.10:17:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.11:22:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.11:22:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.12:27:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.12:27:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.16:13:03 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.16:13:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.16:32:28 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.16:32:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.17:40:49 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.17:40:49 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.18:42:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.18:42:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.19:47:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.19:47:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.20:19:05 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.20:19:05 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.20:52:32 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.20:52:32 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.20:54:26 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.20:54:26 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.20:56:50 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.20:56:50 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.21:57:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.21:57:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.21:59:38 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.21:59:38 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.22:02:25 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.22:02:25 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.22:04:43 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.22:04:43 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.23:02:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.23:02:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.23:05:19 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.23:05:19 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-08.23:06:54 zpool import 11721839244249075587 POOL-4x5TB

2024-09-08.23:06:54 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.07:45:51 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.07:45:51 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.07:51:09 zpool scrub POOL-4x5TB

2024-09-09.08:47:46 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.08:47:46 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.08:49:13 zpool scrub -s POOL-4x5TB

2024-09-09.08:49:23 zpool scrub POOL-4x5TB

2024-09-09.09:53:08 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.09:53:08 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.10:52:44 zpool scrub -p POOL-4x5TB

2024-09-09.10:57:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.10:57:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.11:55:33 zpool scrub -p POOL-4x5TB

2024-09-09.11:57:10 zpool scrub -p POOL-4x5TB

2024-09-09.12:02:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.12:02:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.13:08:03 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.13:08:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.14:13:06 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.14:13:06 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.15:18:03 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.15:18:03 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.16:22:47 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.16:22:47 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.17:27:43 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.17:27:43 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.18:32:40 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.18:32:40 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.19:38:27 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.19:38:27 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-09.20:42:57 zpool import 11721839244249075587 POOL-4x5TB

2024-09-09.20:42:57 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.07:42:53 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.07:42:53 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.08:37:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.08:37:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.09:42:41 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.09:42:41 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.10:19:31 zpool scrub -s POOL-4x5TB

2024-09-10.10:47:52 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.10:47:52 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.11:52:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.11:52:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.12:57:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.12:57:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.14:02:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.14:02:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.15:07:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.15:07:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.16:12:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.16:12:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.17:17:32 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.17:17:32 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.18:22:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.18:22:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.19:27:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.19:27:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.20:32:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.20:32:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-10.21:37:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-10.21:37:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.08:02:46 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.08:02:46 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.08:27:29 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.08:27:29 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.09:32:32 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.09:32:32 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.10:37:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.10:37:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.11:42:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.11:42:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.12:47:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.12:47:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.13:52:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.13:52:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.14:57:30 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.14:57:30 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.16:02:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.16:02:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.17:07:31 zpool import 11721839244249075587 POOL-4x5TB

2024-09-11.17:07:31 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-11.20:27:49 zpool scrub POOL-4x5TB

2024-09-15.17:20:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-15.17:20:35 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-15.18:25:33 zpool import 11721839244249075587 POOL-4x5TB

2024-09-15.18:25:33 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-15.19:30:33 zpool import 11721839244249075587 POOL-4x5TB

2024-09-15.19:30:33 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-15.20:35:32 zpool import 11721839244249075587 POOL-4x5TB

2024-09-15.20:35:32 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-15.21:40:33 zpool import 11721839244249075587 POOL-4x5TB

2024-09-15.21:40:33 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-15.22:45:33 zpool import 11721839244249075587 POOL-4x5TB

2024-09-15.22:45:33 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-15.23:50:35 zpool import 11721839244249075587 POOL-4x5TB

2024-09-15.23:50:35 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.00:55:36 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.00:55:36 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.02:00:32 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.02:00:32 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.03:05:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.03:05:34 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.04:10:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.04:10:34 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.05:15:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.05:15:34 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.06:20:32 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.06:20:32 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.07:25:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.07:25:34 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.08:30:33 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.08:30:33 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.09:35:45 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.09:35:45 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.10:40:34 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.10:40:34 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

2024-09-16.11:45:35 zpool import 11721839244249075587 POOL-4x5TB

2024-09-16.11:45:35 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB

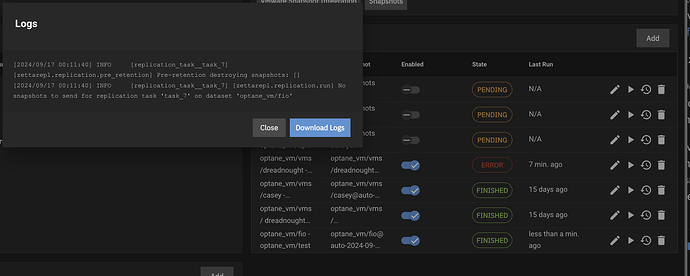

There is evidence that at least some of the reboots are expected. In the past month or so, there are 21 shutdowns and 4 reboots issued by root in the auth.log file. Here are some examples:

Sep 6 11:33:16 bksvr 1 2024-09-06T11:33:16.090048-05:00 bksvr.local shutdown 3530 - - reboot by root:

Sep 6 11:33:16 bksvr 1 2024-09-06T11:33:16.446385-05:00 bksvr.local sshd 1186 - - Received signal 15; terminating.

Sep 6 11:38:39 bksvr 1 2024-09-06T11:38:39.711414-05:00 bksvr.local sshd 1198 - - Server listening on :: port 22.

Sep 6 11:38:39 bksvr 1 2024-09-06T11:38:39.711587-05:00 bksvr.local sshd 1198 - - Server listening on 0.0.0.0 port 22.

Sep 6 12:11:53 bksvr 1 2024-09-06T12:11:53.603951-05:00 bksvr.local sshd 1186 - - Server listening on :: port 22.

Sep 6 12:11:53 bksvr 1 2024-09-06T12:11:53.604124-05:00 bksvr.local sshd 1186 - - Server listening on 0.0.0.0 port 22.

Sep 6 13:16:43 bksvr 1 2024-09-06T13:16:43.239144-05:00 bksvr.local sshd 1186 - - Server listening on :: port 22.

Sep 6 13:16:43 bksvr 1 2024-09-06T13:16:43.239317-05:00 bksvr.local sshd 1186 - - Server listening on 0.0.0.0 port 22.

Sep 6 14:21:42 bksvr 1 2024-09-06T14:21:42.037061-05:00 bksvr.local sshd 1186 - - Server listening on :: port 22.

Sep 6 14:21:42 bksvr 1 2024-09-06T14:21:42.037288-05:00 bksvr.local sshd 1186 - - Server listening on 0.0.0.0 port 22.

Sep 6 15:27:01 bksvr 1 2024-09-06T15:27:01.344073-05:00 bksvr.local sshd 1198 - - Server listening on :: port 22.

Sep 6 15:27:01 bksvr 1 2024-09-06T15:27:01.344229-05:00 bksvr.local sshd 1198 - - Server listening on 0.0.0.0 port 22.

Sep 6 16:32:09 bksvr 1 2024-09-06T16:32:09.304591-05:00 bksvr.local sshd 1164 - - Server listening on :: port 22.

Sep 6 16:32:09 bksvr 1 2024-09-06T16:32:09.304748-05:00 bksvr.local sshd 1164 - - Server listening on 0.0.0.0 port 22.

Sep 6 17:37:11 bksvr 1 2024-09-06T17:37:11.889149-05:00 bksvr.local sshd 1191 - - Server listening on :: port 22.

Sep 6 17:37:11 bksvr 1 2024-09-06T17:37:11.889313-05:00 bksvr.local sshd 1191 - - Server listening on 0.0.0.0 port 22.

Sep 6 18:42:08 bksvr 1 2024-09-06T18:42:08.731257-05:00 bksvr.local sshd 1198 - - Server listening on :: port 22.

Sep 6 18:42:08 bksvr 1 2024-09-06T18:42:08.731411-05:00 bksvr.local sshd 1198 - - Server listening on 0.0.0.0 port 22.

Sep 6 19:46:47 bksvr 1 2024-09-06T19:46:47.554856-05:00 bksvr.local sshd 1186 - - Server listening on :: port 22.

Sep 6 19:46:47 bksvr 1 2024-09-06T19:46:47.555030-05:00 bksvr.local sshd 1186 - - Server listening on 0.0.0.0 port 22.

Sep 6 20:10:27 bksvr 1 2024-09-06T20:10:27.464739-05:00 bksvr.local shutdown 3493 - - power-down by root:

Sep 6 20:10:27 bksvr 1 2024-09-06T20:10:27.823650-05:00 bksvr.local sshd 1186 - - Received signal 15; terminating.

Sep 6 20:26:23 bksvr 1 2024-09-06T20:26:23.042884-05:00 bksvr.local sshd 1196 - - Server listening on :: port 22.

Sep 6 20:26:23 bksvr 1 2024-09-06T20:26:23.043066-05:00 bksvr.local sshd 1196 - - Server listening on 0.0.0.0 port 22.

Sep 6 20:51:55 bksvr 1 2024-09-06T20:51:55.509411-05:00 bksvr.local sshd 1183 - - Server listening on :: port 22.

Sep 6 20:51:55 bksvr 1 2024-09-06T20:51:55.509596-05:00 bksvr.local sshd 1183 - - Server listening on 0.0.0.0 port 22.

Sep 6 21:25:06 bksvr 1 2024-09-06T21:25:06.395384-05:00 bksvr.local login 4317 - - login on pts/0 as root

Sep 6 22:22:25 bksvr 1 2024-09-06T22:22:25.134362-05:00 bksvr.local sshd 1183 - - Server listening on :: port 22.

Sep 6 22:22:25 bksvr 1 2024-09-06T22:22:25.134524-05:00 bksvr.local sshd 1183 - - Server listening on 0.0.0.0 port 22.

Sep 6 23:02:03 bksvr 1 2024-09-06T23:02:03.664568-05:00 bksvr.local sshd 1198 - - Server listening on :: port 22.

Sep 6 23:02:03 bksvr 1 2024-09-06T23:02:03.664730-05:00 bksvr.local sshd 1198 - - Server listening on 0.0.0.0 port 22.

Sep 6 23:16:49 bksvr 1 2024-09-06T23:16:49.433573-05:00 bksvr.local shutdown 3377 - - power-down by root:

If it’s not that (for example, something telling the NAS to turn off over SSH), then I would suggest replacing your PSU. I don’t see any kernel panics or anything else of interest in messages before or after a reboot, so I suspect it’s simply losing power and coming back.
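A rough way to separate the restarts issued by root from the ones with no shutdown record is sketched below. It assumes that every "Server listening on 0.0.0.0 port 22" line marks a boot (sshd could also have been restarted by hand) and that a clean restart is always preceded by a "shutdown ... by root" line. The function name `classify_boots` is made up for illustration, and the sample lines are copied from the excerpt above.

```python
import re

# A few lines copied from the auth.log excerpt above.
AUTH_LOG = """\
Sep 6 11:33:16 bksvr 1 2024-09-06T11:33:16.090048-05:00 bksvr.local shutdown 3530 - - reboot by root:
Sep 6 11:33:16 bksvr 1 2024-09-06T11:33:16.446385-05:00 bksvr.local sshd 1186 - - Received signal 15; terminating.
Sep 6 11:38:39 bksvr 1 2024-09-06T11:38:39.711414-05:00 bksvr.local sshd 1198 - - Server listening on :: port 22.
Sep 6 11:38:39 bksvr 1 2024-09-06T11:38:39.711587-05:00 bksvr.local sshd 1198 - - Server listening on 0.0.0.0 port 22.
Sep 6 12:11:53 bksvr 1 2024-09-06T12:11:53.604124-05:00 bksvr.local sshd 1186 - - Server listening on 0.0.0.0 port 22.
Sep 6 13:16:43 bksvr 1 2024-09-06T13:16:43.239317-05:00 bksvr.local sshd 1186 - - Server listening on 0.0.0.0 port 22.
"""

SHUTDOWN = re.compile(r"shutdown \d+ - - (reboot|power-down) by root")
# Match only the IPv4 line so each sshd start is counted once.
BOOT = re.compile(r"sshd \d+ - - Server listening on 0\.0\.0\.0 port 22")
STAMP = re.compile(r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}")


def classify_boots(text):
    """Label each sshd start as 'expected' if a shutdown by root preceded it."""
    results = []
    clean = False  # True once a shutdown line has been seen since the last boot
    for line in text.splitlines():
        if SHUTDOWN.search(line):
            clean = True
        elif BOOT.search(line):
            match = STAMP.search(line)
            stamp = match.group(0) if match else "unknown"
            results.append((stamp, "expected" if clean else "unexpected"))
            clean = False
    return results


boots = classify_boots(AUTH_LOG)
for stamp, kind in boots:
    print(stamp, kind)
```

The first boot in any log file has no earlier context, so its label should be treated with caution.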

In all honesty, I’ve had a problem in the past where an A/C compressor would turn on, cause the voltage to sag on a circuit, and make a desktop computer reboot. Weird things happen.

The consistency and frequency of this issue are interesting. I suggest this because the trend clearly seems to begin on 8/27. Can you think of any major appliances or other things that draw a lot of power that may have been introduced to the same circuit the NAS is on?
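To put a number on that consistency, the gaps between successive `zpool import` entries in the pool history can be computed. This is only a sketch: it assumes each import corresponds to one boot, the helper names `import_times` and `gaps_in_minutes` are invented for this example, and the sample lines are taken from the history above.

```python
from datetime import datetime

# A few lines copied from the zpool history above.
HISTORY = """\
2024-09-07.18:02:27 zpool import 11721839244249075587 POOL-4x5TB
2024-09-07.18:02:27 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB
2024-09-07.19:07:28 zpool import 11721839244249075587 POOL-4x5TB
2024-09-07.19:07:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB
2024-09-07.20:12:28 zpool import 11721839244249075587 POOL-4x5TB
2024-09-07.20:12:28 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB
2024-09-07.21:17:27 zpool import 11721839244249075587 POOL-4x5TB
2024-09-07.21:17:27 zpool set cachefile=/data/zfs/zpool.cache POOL-4x5TB
"""


def import_times(text):
    """Return the timestamp of every 'zpool import' line."""
    times = []
    for line in text.splitlines():
        parts = line.split(None, 3)  # timestamp, "zpool", subcommand, rest
        if len(parts) >= 3 and parts[1] == "zpool" and parts[2] == "import":
            times.append(datetime.strptime(parts[0], "%Y-%m-%d.%H:%M:%S"))
    return times


def gaps_in_minutes(times):
    """Minutes between consecutive timestamps, rounded to one decimal."""
    return [round((b - a).total_seconds() / 60, 1) for a, b in zip(times, times[1:])]


gaps = gaps_in_minutes(import_times(HISTORY))
print(gaps)
```

On the sample lines the gaps all come out at about 65 minutes, which matches the roughly hourly pattern visible throughout the history.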

Thanks again @NickF1227!

Yes - since 8/27 sounds right. The shutdowns/reboots you referenced were by me, and are expected. (I’ve been trying to resolve this since it started happening.)

I have already replaced the PSU (along with several other hardware items noted above).

As mentioned, I’m pretty positive the root cause is something with the pool (config, etc.) or one of the drives in the pool. If I unplug the 4 drives in the pool and boot the system, it is stable without reboots.

I’m not aware of any changes that coincide with the 8/27 date when this started occurring.

If that were the case, I would expect to see some evidence of it in the kernel logs. I don’t see that. The panic in the screenshot you posted was unfortunately not written out to the logs, likely because of the failure mode. This makes it harder.

The pool completed a scrub recently and shows as healthy:

2024-09-11.20:27:49 zpool scrub POOL-4x5TB

+--------------------------------------------------------------------------------+

+ zpool status -v @1726506632 +

+--------------------------------------------------------------------------------+

pool: POOL-4x5TB

state: ONLINE

scan: scrub repaired 0B in 03:06:22 with 0 errors on Wed Sep 11 23:34:00 2024

config:

NAME STATE READ WRITE CKSUM

POOL-4x5TB ONLINE 0 0 0

raidz1-0 ONLINE 0 0 0

gptid/84067a89-eb53-11e6-ad13-1866da48e055 ONLINE 0 0 0

gptid/84c356a5-eb53-11e6-ad13-1866da48e055 ONLINE 0 0 0

gptid/8574e059-eb53-11e6-ad13-1866da48e055 ONLINE 0 0 0

gptid/862355a8-eb53-11e6-ad13-1866da48e055 ONLINE 0 0 0

errors: No known data errors

pool: freenas-boot

state: ONLINE

scan: scrub repaired 0B in 00:00:49 with 0 errors on Thu Sep 12 03:45:49 2024

config:

NAME STATE READ WRITE CKSUM

freenas-boot ONLINE 0 0 0

ada4p2 ONLINE 0 0 0

errors: No known data errors

debug finished in 0 seconds for zpool status -v

or one of the drives in the pool. If I unplug the 4 drives in the pool and boot the system, it is stable without reboots.

I do note that one of your drives appears to be bad, and is likely a cause for concern

+--------------------------------------------------------------------------------+

+ SMARTD Boot Status @1726506625 +

+--------------------------------------------------------------------------------+

SMARTD will not start on boot.

debug finished in 0 seconds for SMARTD Boot Status

+--------------------------------------------------------------------------------+

+ SMARTD Run Status @1726506625 +

+--------------------------------------------------------------------------------+

smartd_daemon is not running.

debug finished in 0 seconds for SMARTD Run Status

+--------------------------------------------------------------------------------+

+ Scheduled SMART Jobs @1726506625 +

+--------------------------------------------------------------------------------+

debug finished in 0 seconds for Scheduled SMART Jobs

+--------------------------------------------------------------------------------+

+ Disks being checked by SMART @1726506625 +

+--------------------------------------------------------------------------------+

debug finished in 0 seconds for Disks being checked by SMART

+--------------------------------------------------------------------------------+

+ smartctl output @1726506625 +

+--------------------------------------------------------------------------------+

/dev/ada0

smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p9 amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Toshiba X300

Device Model: TOSHIBA HDWE150

Serial Number: 66GEKDMTF57D

LU WWN Device Id: 5 000039 71bd81e97

Firmware Version: FP2A

User Capacity: 5,000,981,078,016 bytes [5.00 TB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: 7200 rpm

Form Factor: 3.5 inches

Device is: In smartctl database [for details use: -P show]

ATA Version is: ATA8-ACS (minor revision not indicated)

SATA Version is: SATA 3.0, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Mon Sep 16 12:10:25 2024 CDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART Status command failed

Please get assistance from https://www.smartmontools.org/

Register values returned from SMART Status command are:

ERR=0x00, SC=0x00, LL=0x00, LM=0x00, LH=0x00, DEV=...., STS=....

SMART Status not supported: Invalid ATA output register values

SMART overall-health self-assessment test result: PASSED

Warning: This result is based on an Attribute check.

General SMART Values:

Offline data collection status: (0x85) Offline data collection activity

was aborted by an interrupting command from host.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: ( 120) seconds.

Offline data collection

capabilities: (0x5b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 2) minutes.

Extended self-test routine

recommended polling time: ( 539) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x000b 100 100 050 Pre-fail Always - 0

2 Throughput_Performance 0x0005 100 100 050 Pre-fail Offline - 0

3 Spin_Up_Time 0x0027 100 100 001 Pre-fail Always - 8891

4 Start_Stop_Count 0x0032 100 100 000 Old_age Always - 161

5 Reallocated_Sector_Ct 0x0033 100 100 050 Pre-fail Always - 20072

7 Seek_Error_Rate 0x000b 100 100 050 Pre-fail Always - 0

8 Seek_Time_Performance 0x0005 100 100 050 Pre-fail Offline - 0

9 Power_On_Hours 0x0032 001 001 000 Old_age Always - 66837

10 Spin_Retry_Count 0x0033 103 100 030 Pre-fail Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 161

191 G-Sense_Error_Rate 0x0032 100 100 000 Old_age Always - 1209

192 Power-Off_Retract_Count 0x0032 100 100 000 Old_age Always - 111

193 Load_Cycle_Count 0x0032 100 100 000 Old_age Always - 1144

194 Temperature_Celsius 0x0022 100 100 000 Old_age Always - 44 (Min/Max 20/57)

196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 2493

197 Current_Pending_Sector 0x0032 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0030 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x0032 200 253 000 Old_age Always - 0

220 Disk_Shift 0x0002 100 100 000 Old_age Always - 0

222 Loaded_Hours 0x0032 001 001 000 Old_age Always - 66595

223 Load_Retry_Count 0x0032 100 100 000 Old_age Always - 0

224 Load_Friction 0x0022 100 100 000 Old_age Always - 0

226 Load-in_Time 0x0026 100 100 000 Old_age Always - 194

240 Head_Flying_Hours 0x0001 100 100 001 Pre-fail Offline - 0

SMART Error Log Version: 1

ATA Error Count: 2

CR = Command Register [HEX]

FR = Features Register [HEX]

SC = Sector Count Register [HEX]

SN = Sector Number Register [HEX]

CL = Cylinder Low Register [HEX]

CH = Cylinder High Register [HEX]

DH = Device/Head Register [HEX]

DC = Device Command Register [HEX]

ER = Error register [HEX]

ST = Status register [HEX]

Powered_Up_Time is measured from power on, and printed as

DDd+hh:mm:SS.sss where DD=days, hh=hours, mm=minutes,

SS=sec, and sss=millisec. It "wraps" after 49.710 days.

Error 2 occurred at disk power-on lifetime: 58379 hours (2432 days + 11 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

04 31 00 80 9c 6c 48

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

ec 00 00 00 00 00 40 00 12d+01:57:50.785 IDENTIFY DEVICE

aa aa aa aa aa aa aa ff 12d+01:57:50.578 [RESERVED]

ea 00 00 00 00 00 40 00 12d+01:57:15.556 FLUSH CACHE EXT

61 20 f0 38 f7 56 40 00 12d+01:57:15.555 WRITE FPDMA QUEUED

61 08 e8 d8 86 bd 40 00 12d+01:57:15.555 WRITE FPDMA QUEUED

Error 1 occurred at disk power-on lifetime: 42314 hours (1763 days + 2 hours)

When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:

ER ST SC SN CL CH DH

-- -- -- -- -- -- --

04 31 00 a8 24 41 40

Commands leading to the command that caused the error were:

CR FR SC SN CL CH DH DC Powered_Up_Time Command/Feature_Name

-- -- -- -- -- -- -- -- ---------------- --------------------

ea 00 00 00 00 00 40 00 00:36:11.875 FLUSH CACHE EXT

61 08 98 e8 3d 44 40 00 00:36:11.875 WRITE FPDMA QUEUED

61 10 90 c8 03 41 40 00 00:36:11.875 WRITE FPDMA QUEUED

61 10 88 b0 03 41 40 00 00:36:11.874 WRITE FPDMA QUEUED

61 08 80 a0 03 41 40 00 00:36:11.874 WRITE FPDMA QUEUED

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Extended offline Completed without error 00% 18120 -

# 2 Short offline Completed without error 00% 18035 -

# 3 Short offline Completed without error 00% 671 -

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.
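The attribute table for ada0 above can be screened for the counters that usually matter most for media health. The sketch below is not part of the debug output. It assumes the whitespace-separated column layout shown in the table, and the watch list (IDs 5, 196, 197, 198) and the name `flag_attributes` are my own choices for illustration.

```python
# Attribute IDs treated as media-health indicators in this sketch (an assumption).
WATCHED = {5, 196, 197, 198}

# Rows copied from the ada0 attribute table above.
ATTRS = """\
5 Reallocated_Sector_Ct 0x0033 100 100 050 Pre-fail Always - 20072
9 Power_On_Hours 0x0032 001 001 000 Old_age Always - 66837
194 Temperature_Celsius 0x0022 100 100 000 Old_age Always - 44 (Min/Max 20/57)
196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 2493
197 Current_Pending_Sector 0x0032 100 100 000 Old_age Always - 0
198 Offline_Uncorrectable 0x0030 100 100 000 Old_age Offline - 0
"""


def flag_attributes(text, watched=WATCHED):
    """Return {attribute_name: raw_value} for watched attributes with a nonzero raw value."""
    flagged = {}
    for line in text.splitlines():
        # Columns: ID, NAME, FLAG, VALUE, WORST, THRESH, TYPE, UPDATED, WHEN_FAILED, RAW_VALUE
        fields = line.split(None, 9)
        if len(fields) < 10 or not fields[0].isdigit():
            continue  # skip headers and anything that is not an attribute row
        raw = fields[9].split()[0]  # drop trailing notes such as "(Min/Max 20/57)"
        if int(fields[0]) in watched and raw.isdigit() and int(raw) > 0:
            flagged[fields[1]] = int(raw)
    return flagged


flagged = flag_attributes(ATTRS)
print(flagged)
```

For ada0 this picks out Reallocated_Sector_Ct and Reallocated_Event_Count, the two nonzero counters in the table above.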

/dev/ada3

smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p9 amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Toshiba X300

Device Model: TOSHIBA HDWE150

Serial Number: 66FAK8T7F57D

LU WWN Device Id: 5 000039 71bb81f64

Firmware Version: FP2A

User Capacity: 5,000,981,078,016 bytes [5.00 TB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: 7200 rpm

Form Factor: 3.5 inches

Device is: In smartctl database [for details use: -P show]

ATA Version is: ATA8-ACS (minor revision not indicated)

SATA Version is: SATA 3.0, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Mon Sep 16 12:10:25 2024 CDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x85) Offline data collection activity

was aborted by an interrupting command from host.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: ( 120) seconds.

Offline data collection

capabilities: (0x5b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 2) minutes.

Extended self-test routine

recommended polling time: ( 547) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x000b 100 100 050 Pre-fail Always - 0

2 Throughput_Performance 0x0005 100 100 050 Pre-fail Offline - 0

3 Spin_Up_Time 0x0027 100 100 001 Pre-fail Always - 8578

4 Start_Stop_Count 0x0032 100 100 000 Old_age Always - 142

5 Reallocated_Sector_Ct 0x0033 100 100 050 Pre-fail Always - 0

7 Seek_Error_Rate 0x000b 100 100 050 Pre-fail Always - 0

8 Seek_Time_Performance 0x0005 100 100 050 Pre-fail Offline - 0

9 Power_On_Hours 0x0032 001 001 000 Old_age Always - 66381

10 Spin_Retry_Count 0x0033 102 100 030 Pre-fail Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 142

191 G-Sense_Error_Rate 0x0032 100 100 000 Old_age Always - 236

192 Power-Off_Retract_Count 0x0032 100 100 000 Old_age Always - 97

193 Load_Cycle_Count 0x0032 100 100 000 Old_age Always - 155

194 Temperature_Celsius 0x0022 100 100 000 Old_age Always - 40 (Min/Max 19/62)

196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 0

197 Current_Pending_Sector 0x0032 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0030 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x0032 200 200 000 Old_age Always - 0

220 Disk_Shift 0x0002 100 100 000 Old_age Always - 0

222 Loaded_Hours 0x0032 001 001 000 Old_age Always - 66372

223 Load_Retry_Count 0x0032 100 100 000 Old_age Always - 0

224 Load_Friction 0x0022 100 100 000 Old_age Always - 0

226 Load-in_Time 0x0026 100 100 000 Old_age Always - 225

240 Head_Flying_Hours 0x0001 100 100 001 Pre-fail Offline - 0

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Short offline Completed without error 00% 65535 -

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.

/dev/ada2

smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p9 amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Toshiba X300

Device Model: TOSHIBA HDWE150

Serial Number: 86G2KJ16F57D

LU WWN Device Id: 5 000039 73b781ce2

Firmware Version: FP2A

User Capacity: 5,000,981,078,016 bytes [5.00 TB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: 7200 rpm

Form Factor: 3.5 inches

Device is: In smartctl database [for details use: -P show]

ATA Version is: ATA8-ACS (minor revision not indicated)

SATA Version is: SATA 3.0, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Mon Sep 16 12:10:26 2024 CDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x85) Offline data collection activity

was aborted by an interrupting command from host.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 40) The self-test routine was interrupted

by the host with a hard or soft reset.

Total time to complete Offline

data collection: ( 120) seconds.

Offline data collection

capabilities: (0x5b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 2) minutes.

Extended self-test routine

recommended polling time: ( 535) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x000b 100 100 050 Pre-fail Always - 0

2 Throughput_Performance 0x0005 100 100 050 Pre-fail Offline - 0

3 Spin_Up_Time 0x0027 100 100 001 Pre-fail Always - 8608

4 Start_Stop_Count 0x0032 100 100 000 Old_age Always - 154

5 Reallocated_Sector_Ct 0x0033 100 100 050 Pre-fail Always - 488

7 Seek_Error_Rate 0x000b 100 100 050 Pre-fail Always - 0

8 Seek_Time_Performance 0x0005 100 100 050 Pre-fail Offline - 0

9 Power_On_Hours 0x0032 001 001 000 Old_age Always - 66298

10 Spin_Retry_Count 0x0033 103 100 030 Pre-fail Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 154

191 G-Sense_Error_Rate 0x0032 100 100 000 Old_age Always - 297

192 Power-Off_Retract_Count 0x0032 100 100 000 Old_age Always - 107

193 Load_Cycle_Count 0x0032 100 100 000 Old_age Always - 159

194 Temperature_Celsius 0x0022 100 100 000 Old_age Always - 42 (Min/Max 20/60)

196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 59

197 Current_Pending_Sector 0x0032 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0030 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x0032 200 200 000 Old_age Always - 0

220 Disk_Shift 0x0002 100 100 000 Old_age Always - 0

222 Loaded_Hours 0x0032 001 001 000 Old_age Always - 66298

223 Load_Retry_Count 0x0032 100 100 000 Old_age Always - 0

224 Load_Friction 0x0022 100 100 000 Old_age Always - 0

226 Load-in_Time 0x0026 100 100 000 Old_age Always - 556

240 Head_Flying_Hours 0x0001 100 100 001 Pre-fail Offline - 0

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Extended offline Interrupted (host reset) 80% 65535 -

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.

/dev/ada1

smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p9 amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Model Family: Toshiba X300

Device Model: TOSHIBA HDWE150

Serial Number: 66G8K0EPF57D

LU WWN Device Id: 5 000039 71ba81aee

Firmware Version: FP2A

User Capacity: 5,000,981,078,016 bytes [5.00 TB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: 7200 rpm

Form Factor: 3.5 inches

Device is: In smartctl database [for details use: -P show]

ATA Version is: ATA8-ACS (minor revision not indicated)

SATA Version is: SATA 3.0, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Mon Sep 16 12:10:26 2024 CDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x85) Offline data collection activity

was aborted by an interrupting command from host.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 41) The self-test routine was interrupted

by the host with a hard or soft reset.

Total time to complete Offline

data collection: ( 120) seconds.

Offline data collection

capabilities: (0x5b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

No Conveyance Self-test supported.

Selective Self-test supported.

SMART capabilities: (0x0003) Saves SMART data before entering

power-saving mode.

Supports SMART auto save timer.

Error logging capability: (0x01) Error logging supported.

General Purpose Logging supported.

Short self-test routine

recommended polling time: ( 2) minutes.

Extended self-test routine

recommended polling time: ( 525) minutes.

SCT capabilities: (0x003d) SCT Status supported.

SCT Error Recovery Control supported.

SCT Feature Control supported.

SCT Data Table supported.

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x000b 100 100 050 Pre-fail Always - 0

2 Throughput_Performance 0x0005 100 100 050 Pre-fail Offline - 0

3 Spin_Up_Time 0x0027 100 100 001 Pre-fail Always - 8718

4 Start_Stop_Count 0x0032 100 100 000 Old_age Always - 143

5 Reallocated_Sector_Ct 0x0033 100 100 050 Pre-fail Always - 0

7 Seek_Error_Rate 0x000b 100 100 050 Pre-fail Always - 0

8 Seek_Time_Performance 0x0005 100 100 050 Pre-fail Offline - 0

9 Power_On_Hours 0x0032 001 001 000 Old_age Always - 66382

10 Spin_Retry_Count 0x0033 102 100 030 Pre-fail Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 143

191 G-Sense_Error_Rate 0x0032 100 100 000 Old_age Always - 738

192 Power-Off_Retract_Count 0x0032 100 100 000 Old_age Always - 94

193 Load_Cycle_Count 0x0032 100 100 000 Old_age Always - 273

194 Temperature_Celsius 0x0022 100 100 000 Old_age Always - 38 (Min/Max 20/58)

196 Reallocated_Event_Count 0x0032 100 100 000 Old_age Always - 0

197 Current_Pending_Sector 0x0032 100 100 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0030 100 100 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x0032 200 200 000 Old_age Always - 0

220 Disk_Shift 0x0002 100 100 000 Old_age Always - 0

222 Loaded_Hours 0x0032 001 001 000 Old_age Always - 66364

223 Load_Retry_Count 0x0032 100 100 000 Old_age Always - 0

224 Load_Friction 0x0022 100 100 000 Old_age Always - 0

226 Load-in_Time 0x0026 100 100 000 Old_age Always - 580

240 Head_Flying_Hours 0x0001 100 100 001 Pre-fail Offline - 0

SMART Error Log Version: 1

No Errors Logged

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Extended offline Interrupted (host reset) 90% 65535 -

# 2 Extended offline Interrupted (host reset) 80% 65535 -

# 3 Short offline Completed without error 00% 65535 -

SMART Selective self-test log data structure revision number 1

SPAN MIN_LBA MAX_LBA CURRENT_TEST_STATUS

1 0 0 Not_testing

2 0 0 Not_testing

3 0 0 Not_testing

4 0 0 Not_testing

5 0 0 Not_testing

Selective self-test flags (0x0):

After scanning selected spans, do NOT read-scan remainder of disk.

If Selective self-test is pending on power-up, resume after 0 minute delay.

/dev/ada4

smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p9 amd64] (local build)

Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===

Device Model: Micron_M600_MTFDDAY256MBF

Serial Number: 16121385A4FE

LU WWN Device Id: 5 00a075 11385a4fe

Firmware Version: MU05

User Capacity: 256,060,514,304 bytes [256 GB]

Sector Sizes: 512 bytes logical, 4096 bytes physical

Rotation Rate: Solid State Device

Form Factor: < 1.8 inches

TRIM Command: Available, deterministic, zeroed

Device is: Not in smartctl database [for details use: -P showall]

ATA Version is: ACS-3 T13/2161-D revision 4

SATA Version is: SATA 3.2, 6.0 Gb/s (current: 6.0 Gb/s)

Local Time is: Mon Sep 16 12:10:26 2024 CDT

SMART support is: Available - device has SMART capability.

SMART support is: Enabled

=== START OF READ SMART DATA SECTION ===

SMART overall-health self-assessment test result: PASSED

General SMART Values:

Offline data collection status: (0x84) Offline data collection activity

was suspended by an interrupting command from host.

Auto Offline Data Collection: Enabled.

Self-test execution status: ( 0) The previous self-test routine completed

without error or no self-test has ever

been run.

Total time to complete Offline

data collection: ( 23) seconds.

Offline data collection

capabilities: (0x7b) SMART execute Offline immediate.

Auto Offline data collection on/off support.

Suspend Offline collection upon new

command.

Offline surface scan supported.

Self-test supported.

Conveyance Self-test supported.