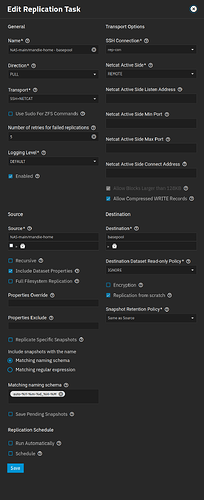

I am trying to replicate a dataset containing a zvol from my TrueNAS Core system to my TrueNAS Scale system. Both are on the latest software versions.

My first idea was to pull the data from Core to Scale, but I did not manage to get that working.

Then I tried to push the data from Core to Scale, which seemed to be easier.

However, that is not working either (at least not in my case).

I defined the same user on Core and on Scale. The user has a public/private key pair on Core and is identified by its public key on Scale.

On both systems the user has sufficient permissions. On Scale it in fact has everything I could think of, short of being root itself.

Nevertheless I get the error messages shown below. I tried multiple things, but in the end the message is always "cannot unmount" … ???

The destination is mnt/Olifant/BackUp-Panda-VMs/Graylog/GrayLog (zvol), and even making the involved user the owner of that dataset did not help.

Louis

[2024/12/02 20:10:35] INFO [Thread-88] [zettarepl.paramiko.replication_task__task_4] Connected (version 2.0, client OpenSSH_9.2p1)

[2024/12/02 20:10:35] INFO [Thread-88] [zettarepl.paramiko.replication_task__task_4] Authentication (publickey) successful!

[2024/12/02 20:10:36] INFO [replication_task__task_4] [zettarepl.replication.pre_retention] Pre-retention destroying snapshots:

[2024/12/02 20:10:36] INFO [replication_task__task_4] [zettarepl.replication.run] For replication task 'task_4': doing push from 'SamsungSSD/GrayLog' to 'Olifant/BackUp-Panda-VMs/GrayLog' of snapshot='daily-2024-11-19_00-00' incremental_base=None receive_resume_token=None encryption=False

[2024/12/02 20:10:36] INFO [replication_task__task_4] [zettarepl.paramiko.replication_task__task_4.sftp] [chan 6] Opened sftp connection (server version 3)

[2024/12/02 20:10:36] INFO [replication_task__task_4] [zettarepl.transport.ssh_netcat] Automatically chose connect address '192.168.18.32'

[2024/12/02 20:10:36] ERROR [replication_task__task_4] [zettarepl.replication.run] For task 'task_4' unhandled replication error SshNetcatExecException(None, ExecException(1, "cannot unmount '/mnt/Olifant/BackUp-Panda-VMs/GrayLog': permission denied\n"))

Traceback (most recent call last):

File "/usr/local/lib/python3.9/site-packages/zettarepl/replication/run.py", line 181, in run_replication_tasks

retry_stuck_replication(

File "/usr/local/lib/python3.9/site-packages/zettarepl/replication/stuck.py", line 18, in retry_stuck_replication

return func()

File "/usr/local/lib/python3.9/site-packages/zettarepl/replication/run.py", line 182, in <lambda>

lambda: run_replication_task_part(replication_task, source_dataset, src_context, dst_context,

File "/usr/local/lib/python3.9/site-packages/zettarepl/replication/run.py", line 279, in run_replication_task_part

run_replication_steps(step_templates, observer)

File "/usr/local/lib/python3.9/site-packages/zettarepl/replication/run.py", line 637, in run_replication_steps

replicate_snapshots(step_template, incremental_base, snapshots, encryption, observer)

File "/usr/local/lib/python3.9/site-packages/zettarepl/replication/run.py", line 720, in replicate_snapshots

run_replication_step(step, observer)

File "/usr/local/lib/python3.9/site-packages/zettarepl/replication/run.py", line 797, in run_replication_step

ReplicationProcessRunner(process, monitor).run()

File "/usr/local/lib/python3.9/site-packages/zettarepl/replication/process_runner.py", line 33, in run

raise self.process_exception

File "/usr/local/lib/python3.9/site-packages/zettarepl/replication/process_runner.py", line 37, in _wait_process

self.replication_process.wait()

File "/usr/local/lib/python3.9/site-packages/zettarepl/transport/ssh_netcat.py", line 212, in wait

raise SshNetcatExecException(None, self.listen_exec_error)

zettarepl.transport.ssh_netcat.SshNetcatExecException: Active side: cannot unmount '/mnt/Olifant/BackUp-Panda-VMs/GrayLog': permission denied