Might be that it skipped the import-try this time yeah.

Hey, sorry for the long delay, I’ve been really busy. I have just done a fresh install on my main PC and got some errors on import.

Error

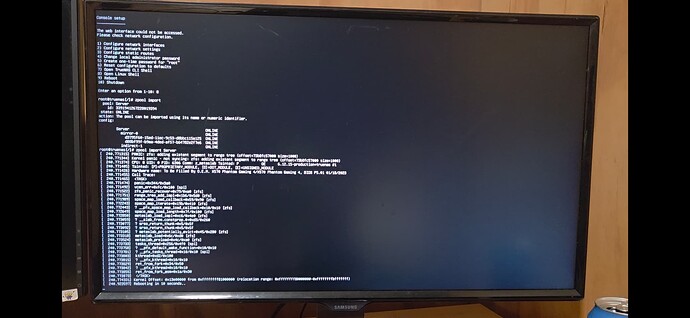

[Text recovered from the screenshot. Values the OCR damaged beyond recovery are marked as unreadable, and call-trace offsets are omitted.]

Console setup

The web interface could not be accessed.

Please check network configuration.

Configure network settings

Configure network interfaces

Configure static routes

Change local administrator password

Create one-time password for "root"

Reset configuration to defaults

Open TrueNAS

Open Linux Shell

9) Reboot

10) Shutdown

Enter an option from 1-10: 8

root@truenas[~]# zpool import

pool: Server

id: 3391941267228019394

state: ONLINE

action: The pool can be imported using its name or numeric identifier.

config:

Server ONLINE

mirror-0 ONLINE

d3795f60-15ed-11ec-9c53-d8bbc115a125 ONLINE

d86bf99f-baa-4ded-af57-b64782e2f7e6 ONLINE

indirect-1 ONLINE

root@truenas[~]# zpool import Server

[ 240.771315] PANIC: zfs: adding existent segment to range tree (offset=79b8fc57000 size=1000)

[ 240.771344] Kernel panic - not syncing: zfs: adding existent segment to range tree (offset=79b8fc57000 size=1000)

[ 240.771374] CPU: [unreadable] PID: 6306 Comm: z_metaslab Tainted: [unreadable] 6.12.[unreadable]

[ 240.771405] Tainted: [P]=PROPRIETARY_MODULE, [unreadable]

Hardware name: To Be Filled By O.E.M. X570 Phantom Gaming 4, BIOS P5.01 01/13/2023

Call Trace:

<TASK>

panic

vcmn_err [spl]

zfs_panic_recover [zfs]

range_tree_add_impl [zfs]

space_map_load_callback [zfs]

space_map_iterate [zfs]

space_map_load_length [zfs]

metaslab_load_impl [zfs]

metaslab_load [zfs]

metaslab_preload [zfs]

taskq_thread [spl]

kthread

ret_from_fork

ret_from_fork_asm

</TASK>

Kernel Offset: [unreadable] (relocation range: [unreadable])

Rebooting in [unreadable] seconds.

Okay, so a kernel panic as soon as you try to import. Never seen that before.

Maybe @HoneyBadger , @Arwen or @etorix could help here but I am out of my depth… sorry.

No worries, thanks for the help. Hopefully someone else has some ideas! I forgot to mention that just before this happened, I imported the pool successfully via the shell in the TrueNAS live install media. Very weird.

This looks like a nasty bug when device removal (resulting in an indirect vdev) is used in the presence of active block-cloning.

A patch was merged into OpenZFS, but it only prevents future crashes. If you already tripped the bug, then the patch might not be enough to get your pool back online.

Here is another thread for reference.

EDIT: If you’re able to import it into an Ubuntu live session, you can share additional information about the pool.

zpool get all Server

zpool history Server | grep "remove\|detach"

It’s possible that this is a different bug and might only relate to a corrupt or failing boot device that TrueNAS resides on.

Yes, I agree with others that you may have pool corruption. How that happened, I don’t know.

So, where do you go from here?

You can test importing the pool from an earlier transaction using:

zpool import -Fn Server

If that seems to work, you can try to import read only. Be prepared to copy the data off to another location. (Or you can risk it without the read only option.)

zpool import -F -o readonly=on Server

If neither of those seems to work, then you can go more extreme. But this is really beyond normal recovery, so read the manual before using it without the “-n” option. It can also take a long time, depending on computer speed, the amount of storage used, and the speed of the storage.

zpool import -FXn Server

Good luck

If you check my post history, I did have to remove a failing drive about 14 months ago, which shows up in the history command.

See below for the results of the requested commands:


root@ubuntu:/home/ubuntu# zpool get all Server

NAME PROPERTY VALUE SOURCE

Server size 10.9T -

Server capacity 77% -

Server altroot - default

Server health ONLINE -

Server guid 3391941267228019394 -

Server version - default

Server bootfs - default

Server delegation on default

Server autoreplace off default

Server cachefile - default

Server failmode continue local

Server listsnapshots off default

Server autoexpand on local

Server dedupratio 1.00x -

Server free 2.49T -

Server allocated 8.41T -

Server readonly off -

Server ashift 12 local

Server comment - default

Server expandsize - -

Server freeing 0 -

Server fragmentation 27% -

Server leaked 0 -

Server multihost off default

Server checkpoint - -

Server load_guid 14270550846428594687 -

Server autotrim off default

Server compatibility off default

Server bcloneused 75.2G -

Server bclonesaved 75.2G -

Server bcloneratio 2.00x -

Server feature@async_destroy enabled local

Server feature@empty_bpobj active local

Server feature@lz4_compress active local

Server feature@multi_vdev_crash_dump enabled local

Server feature@spacemap_histogram active local

Server feature@enabled_txg active local

Server feature@hole_birth active local

Server feature@extensible_dataset active local

Server feature@embedded_data active local

Server feature@bookmarks enabled local

Server feature@filesystem_limits enabled local

Server feature@large_blocks enabled local

Server feature@large_dnode enabled local

Server feature@sha512 enabled local

Server feature@skein enabled local

Server feature@edonr enabled local

Server feature@userobj_accounting active local

Server feature@encryption enabled local

Server feature@project_quota active local

Server feature@device_removal active local

Server feature@obsolete_counts active local

Server feature@zpool_checkpoint enabled local

Server feature@spacemap_v2 active local

Server feature@allocation_classes enabled local

Server feature@resilver_defer enabled local

Server feature@bookmark_v2 enabled local

Server feature@redaction_bookmarks enabled local

Server feature@redacted_datasets enabled local

Server feature@bookmark_written enabled local

Server feature@log_spacemap active local

Server feature@livelist enabled local

Server feature@device_rebuild enabled local

Server feature@zstd_compress enabled local

Server feature@draid enabled local

Server feature@zilsaxattr active local

Server feature@head_errlog active local

Server feature@blake3 enabled local

Server feature@block_cloning active local

Server feature@vdev_zaps_v2 active local

Server unsupported@com.delphix:redaction_list_spill inactive local

Server unsupported@org.openzfs:raidz_expansion inactive local

Server unsupported@com.klarasystems:fast_dedup inactive local

Server unsupported@org.zfsonlinux:longname inactive local

Server unsupported@com.klarasystems:large_microzap inactive


zpool history Server | grep "remove\|detach"

2024-06-04.10:34:25 py-libzfs: zpool remove Server /dev/sda2

2024-06-04.21:01:05 py-libzfs: zpool remove Server /dev/sda2

2024-06-05.21:12:04 py-libzfs: zpool remove Server /dev/sda2

I will try these later. I assume I should run them from TrueNAS, since I can import the pool normally in Ubuntu? From memory, I could access it read-only (the second command you listed) in TrueNAS when I was trying last week.

Update: After importing the pool to Ubuntu to get the requested information, it has been stuck exporting for over an hour. The import only took 5-10 seconds.

Edit: Below is an excerpt from dmesg, mentioning the metaslab issues again. Where can I go from here?


[ 369.784798] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 369.784802] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 369.784805] task:z_metaslab state:D stack:0 pid:7178 tgid:7178 ppid:2 task_flags:0x288040 flags:0x00004000

[ 369.784811] Call Trace:

[ 369.784814] <TASK>

[ 369.784819] __schedule+0x2c0/0x640

[ 369.784829] schedule+0x29/0xd0

[ 369.784835] cv_wait_common+0x101/0x140 [spl]

[ 369.784852] ? __pfx_autoremove_wake_function+0x10/0x10

[ 369.784859] __cv_wait+0x15/0x30 [spl]

[ 369.784872] metaslab_load_wait+0x28/0x50 [zfs]

[ 369.785100] metaslab_load+0x17/0xe0 [zfs]

[ 369.785316] metaslab_preload+0x48/0xa0 [zfs]

[ 369.785531] taskq_thread+0x277/0x510 [spl]

[ 369.785546] ? __pfx_default_wake_function+0x10/0x10

[ 369.785556] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 369.785568] kthread+0xfe/0x230

[ 369.785573] ? __pfx_kthread+0x10/0x10

[ 369.785577] ret_from_fork+0x47/0x70

[ 369.785581] ? __pfx_kthread+0x10/0x10

[ 369.785584] ret_from_fork_asm+0x1a/0x30

[ 369.785594] </TASK>

[ 369.785601] INFO: task z_metaslab:7288 blocked for more than 122 seconds.

[ 369.785605] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 369.785608] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 369.785610] task:z_metaslab state:D stack:0 pid:7288 tgid:7288 ppid:2 task_flags:0x288040 flags:0x00004000

[ 369.785615] Call Trace:

[ 369.785617] <TASK>

[ 369.785620] __schedule+0x2c0/0x640

[ 369.785627] schedule+0x29/0xd0

[ 369.785632] vcmn_err+0xe2/0x110 [spl]

[ 369.785652] zfs_panic_recover+0x75/0xa0 [zfs]

[ 369.785878] zfs_range_tree_add_impl+0x1f2/0x620 [zfs]

[ 369.786099] zfs_range_tree_add+0x11/0x20 [zfs]

[ 369.786312] space_map_load_callback+0x6b/0xb0 [zfs]

[ 369.786523] space_map_iterate+0x1bc/0x480 [zfs]

[ 369.786733] ? default_send_IPI_single_phys+0x4b/0x90

[ 369.786740] ? __pfx_space_map_load_callback+0x10/0x10 [zfs]

[ 369.786948] space_map_load_length+0x7c/0x100 [zfs]

[ 369.787154] metaslab_load_impl+0xbb/0x4e0 [zfs]

[ 369.787371] ? spl_kmem_free_impl+0x2c/0x40 [spl]

[ 369.787385] ? srso_return_thunk+0x5/0x5f

[ 369.787389] ? srso_return_thunk+0x5/0x5f

[ 369.787392] ? arc_all_memory+0xe/0x20 [zfs]

[ 369.787594] ? srso_return_thunk+0x5/0x5f

[ 369.787598] ? metaslab_potentially_evict+0x40/0x280 [zfs]

[ 369.787819] metaslab_load+0x72/0xe0 [zfs]

[ 369.788034] metaslab_preload+0x48/0xa0 [zfs]

[ 369.788248] taskq_thread+0x277/0x510 [spl]

[ 369.788263] ? __pfx_default_wake_function+0x10/0x10

[ 369.788272] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 369.788284] kthread+0xfe/0x230

[ 369.788288] ? __pfx_kthread+0x10/0x10

[ 369.788292] ret_from_fork+0x47/0x70

[ 369.788295] ? __pfx_kthread+0x10/0x10

[ 369.788299] ret_from_fork_asm+0x1a/0x30

[ 369.788307] </TASK>

[ 369.788310] INFO: task z_metaslab:7290 blocked for more than 122 seconds.

[ 369.788313] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 369.788315] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 369.788316] task:z_metaslab state:D stack:0 pid:7290 tgid:7290 ppid:2 task_flags:0x288040 flags:0x00004000

[ 369.788320] Call Trace:

[ 369.788322] <TASK>

[ 369.788326] __schedule+0x2c0/0x640

[ 369.788333] schedule+0x29/0xd0

[ 369.788337] cv_wait_common+0x101/0x140 [spl]

[ 369.788350] ? __pfx_autoremove_wake_function+0x10/0x10

[ 369.788356] __cv_wait+0x15/0x30 [spl]

[ 369.788368] metaslab_load_wait+0x28/0x50 [zfs]

[ 369.788584] metaslab_load+0x17/0xe0 [zfs]

[ 369.788803] metaslab_preload+0x48/0xa0 [zfs]

[ 369.789016] taskq_thread+0x277/0x510 [spl]

[ 369.789031] ? __pfx_default_wake_function+0x10/0x10

[ 369.789040] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 369.789053] kthread+0xfe/0x230

[ 369.789057] ? __pfx_kthread+0x10/0x10

[ 369.789061] ret_from_fork+0x47/0x70

[ 369.789064] ? __pfx_kthread+0x10/0x10

[ 369.789067] ret_from_fork_asm+0x1a/0x30

[ 369.789076] </TASK>

[ 369.789078] INFO: task z_metaslab:7291 blocked for more than 122 seconds.

[ 369.789081] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 369.789084] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 369.789086] task:z_metaslab state:D stack:0 pid:7291 tgid:7291 ppid:2 task_flags:0x288040 flags:0x00004000

[ 369.789091] Call Trace:

[ 369.789092] <TASK>

[ 369.789096] __schedule+0x2c0/0x640

[ 369.789103] schedule+0x29/0xd0

[ 369.789107] cv_wait_common+0x101/0x140 [spl]

[ 369.789120] ? __pfx_autoremove_wake_function+0x10/0x10

[ 369.789125] __cv_wait+0x15/0x30 [spl]

[ 369.789138] metaslab_load_wait+0x28/0x50 [zfs]

[ 369.789353] metaslab_load+0x17/0xe0 [zfs]

[ 369.789566] metaslab_preload+0x48/0xa0 [zfs]

[ 369.789786] taskq_thread+0x277/0x510 [spl]

[ 369.789801] ? __pfx_default_wake_function+0x10/0x10

[ 369.789810] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 369.789822] kthread+0xfe/0x230

[ 369.789826] ? __pfx_kthread+0x10/0x10

[ 369.789830] ret_from_fork+0x47/0x70

[ 369.789833] ? __pfx_kthread+0x10/0x10

[ 369.789837] ret_from_fork_asm+0x1a/0x30

[ 369.789845] </TASK>

[ 369.789847] INFO: task z_metaslab:7292 blocked for more than 122 seconds.

[ 369.789852] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 369.789855] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 369.789858] task:z_metaslab state:D stack:0 pid:7292 tgid:7292 ppid:2 task_flags:0x288040 flags:0x00004000

[ 369.789864] Call Trace:

[ 369.789866] <TASK>

[ 369.789870] __schedule+0x2c0/0x640

[ 369.789878] schedule+0x29/0xd0

[ 369.789884] cv_wait_common+0x101/0x140 [spl]

[ 369.789901] ? __pfx_autoremove_wake_function+0x10/0x10

[ 369.789906] __cv_wait+0x15/0x30 [spl]

[ 369.789919] metaslab_load_wait+0x28/0x50 [zfs]

[ 369.790134] metaslab_load+0x17/0xe0 [zfs]

[ 369.790348] metaslab_preload+0x48/0xa0 [zfs]

[ 369.790562] taskq_thread+0x277/0x510 [spl]

[ 369.790577] ? __pfx_default_wake_function+0x10/0x10

[ 369.790586] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 369.790598] kthread+0xfe/0x230

[ 369.790602] ? __pfx_kthread+0x10/0x10

[ 369.790606] ret_from_fork+0x47/0x70

[ 369.790609] ? __pfx_kthread+0x10/0x10

[ 369.790613] ret_from_fork_asm+0x1a/0x30

[ 369.790621] </TASK>

[ 369.790623] INFO: task z_metaslab:7293 blocked for more than 122 seconds.

[ 369.790626] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 369.790629] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 369.790632] task:z_metaslab state:D stack:0 pid:7293 tgid:7293 ppid:2 task_flags:0x288040 flags:0x00004000

[ 369.790636] Call Trace:

[ 369.790638] <TASK>

[ 369.790641] __schedule+0x2c0/0x640

[ 369.790648] schedule+0x29/0xd0

[ 369.790652] cv_wait_common+0x101/0x140 [spl]

[ 369.790665] ? __pfx_autoremove_wake_function+0x10/0x10

[ 369.790671] __cv_wait+0x15/0x30 [spl]

[ 369.790683] metaslab_load_wait+0x28/0x50 [zfs]

[ 369.790903] metaslab_load+0x17/0xe0 [zfs]

[ 369.791126] metaslab_preload+0x48/0xa0 [zfs]

[ 369.791340] taskq_thread+0x277/0x510 [spl]

[ 369.791355] ? __pfx_default_wake_function+0x10/0x10

[ 369.791364] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 369.791376] kthread+0xfe/0x230

[ 369.791380] ? __pfx_kthread+0x10/0x10

[ 369.791384] ret_from_fork+0x47/0x70

[ 369.791387] ? __pfx_kthread+0x10/0x10

[ 369.791391] ret_from_fork_asm+0x1a/0x30

[ 369.791399] </TASK>

[ 369.791401] INFO: task z_metaslab:7294 blocked for more than 122 seconds.

[ 369.791404] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 369.791407] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 369.791409] task:z_metaslab state:D stack:0 pid:7294 tgid:7294 ppid:2 task_flags:0x288040 flags:0x00004000

[ 369.791414] Call Trace:

[ 369.791416] <TASK>

[ 369.791419] __schedule+0x2c0/0x640

[ 369.791426] schedule+0x29/0xd0

[ 369.791430] cv_wait_common+0x101/0x140 [spl]

[ 369.791443] ? __pfx_autoremove_wake_function+0x10/0x10

[ 369.791448] __cv_wait+0x15/0x30 [spl]

[ 369.791460] metaslab_load_wait+0x28/0x50 [zfs]

[ 369.791676] metaslab_load+0x17/0xe0 [zfs]

[ 369.791895] metaslab_preload+0x48/0xa0 [zfs]

[ 369.792120] taskq_thread+0x277/0x510 [spl]

[ 369.792135] ? __pfx_default_wake_function+0x10/0x10

[ 369.792144] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 369.792156] kthread+0xfe/0x230

[ 369.792160] ? __pfx_kthread+0x10/0x10

[ 369.792164] ret_from_fork+0x47/0x70

[ 369.792167] ? __pfx_kthread+0x10/0x10

[ 369.792171] ret_from_fork_asm+0x1a/0x30

[ 369.792179] </TASK>

[ 369.792181] INFO: task z_metaslab:7295 blocked for more than 122 seconds.

[ 369.792185] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 369.792187] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 369.792190] task:z_metaslab state:D stack:0 pid:7295 tgid:7295 ppid:2 task_flags:0x288040 flags:0x00004000

[ 369.792194] Call Trace:

[ 369.792196] <TASK>

[ 369.792199] __schedule+0x2c0/0x640

[ 369.792206] schedule+0x29/0xd0

[ 369.792210] cv_wait_common+0x101/0x140 [spl]

[ 369.792223] ? __pfx_autoremove_wake_function+0x10/0x10

[ 369.792229] __cv_wait+0x15/0x30 [spl]

[ 369.792241] metaslab_load_wait+0x28/0x50 [zfs]

[ 369.792458] metaslab_load+0x17/0xe0 [zfs]

[ 369.792672] metaslab_preload+0x48/0xa0 [zfs]

[ 369.792890] taskq_thread+0x277/0x510 [spl]

[ 369.792905] ? __pfx_default_wake_function+0x10/0x10

[ 369.792914] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 369.792927] kthread+0xfe/0x230

[ 369.792931] ? __pfx_kthread+0x10/0x10

[ 369.792935] ret_from_fork+0x47/0x70

[ 369.792938] ? __pfx_kthread+0x10/0x10

[ 369.792941] ret_from_fork_asm+0x1a/0x30

[ 369.792950] </TASK>

[ 492.661056] INFO: task z_metaslab:7178 blocked for more than 245 seconds.

[ 492.661065] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 492.661069] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 492.661071] task:z_metaslab state:D stack:0 pid:7178 tgid:7178 ppid:2 task_flags:0x288040 flags:0x00004000

[ 492.661077] Call Trace:

[ 492.661080] <TASK>

[ 492.661085] __schedule+0x2c0/0x640

[ 492.661095] schedule+0x29/0xd0

[ 492.661100] cv_wait_common+0x101/0x140 [spl]

[ 492.661114] ? __pfx_autoremove_wake_function+0x10/0x10

[ 492.661121] __cv_wait+0x15/0x30 [spl]

[ 492.661134] metaslab_load_wait+0x28/0x50 [zfs]

[ 492.661361] metaslab_load+0x17/0xe0 [zfs]

[ 492.661577] metaslab_preload+0x48/0xa0 [zfs]

[ 492.661792] taskq_thread+0x277/0x510 [spl]

[ 492.661807] ? __pfx_default_wake_function+0x10/0x10

[ 492.661817] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 492.661829] kthread+0xfe/0x230

[ 492.661834] ? __pfx_kthread+0x10/0x10

[ 492.661837] ret_from_fork+0x47/0x70

[ 492.661841] ? __pfx_kthread+0x10/0x10

[ 492.661845] ret_from_fork_asm+0x1a/0x30

[ 492.661854] </TASK>

[ 492.661860] INFO: task z_metaslab:7288 blocked for more than 245 seconds.

[ 492.661864] Tainted: P O 6.14.0-27-generic #27~24.04.1-Ubuntu

[ 492.661867] "echo 0 > /proc/sys/kernel/hung_task_timeout_secs" disables this message.

[ 492.661869] task:z_metaslab state:D stack:0 pid:7288 tgid:7288 ppid:2 task_flags:0x288040 flags:0x00004000

[ 492.661874] Call Trace:

[ 492.661876] <TASK>

[ 492.661879] __schedule+0x2c0/0x640

[ 492.661887] schedule+0x29/0xd0

[ 492.661891] vcmn_err+0xe2/0x110 [spl]

[ 492.661911] zfs_panic_recover+0x75/0xa0 [zfs]

[ 492.662136] zfs_range_tree_add_impl+0x1f2/0x620 [zfs]

[ 492.662368] zfs_range_tree_add+0x11/0x20 [zfs]

[ 492.662582] space_map_load_callback+0x6b/0xb0 [zfs]

[ 492.662793] space_map_iterate+0x1bc/0x480 [zfs]

[ 492.663004] ? default_send_IPI_single_phys+0x4b/0x90

[ 492.663011] ? __pfx_space_map_load_callback+0x10/0x10 [zfs]

[ 492.663223] space_map_load_length+0x7c/0x100 [zfs]

[ 492.663429] metaslab_load_impl+0xbb/0x4e0 [zfs]

[ 492.663646] ? spl_kmem_free_impl+0x2c/0x40 [spl]

[ 492.663660] ? srso_return_thunk+0x5/0x5f

[ 492.663664] ? srso_return_thunk+0x5/0x5f

[ 492.663667] ? arc_all_memory+0xe/0x20 [zfs]

[ 492.663869] ? srso_return_thunk+0x5/0x5f

[ 492.663872] ? metaslab_potentially_evict+0x40/0x280 [zfs]

[ 492.664094] metaslab_load+0x72/0xe0 [zfs]

[ 492.664313] metaslab_preload+0x48/0xa0 [zfs]

[ 492.664527] taskq_thread+0x277/0x510 [spl]

[ 492.664542] ? __pfx_default_wake_function+0x10/0x10

[ 492.664551] ? __pfx_taskq_thread+0x10/0x10 [spl]

[ 492.664564] kthread+0xfe/0x230

[ 492.664568] ? __pfx_kthread+0x10/0x10

[ 492.664572] ret_from_fork+0x47/0x70

[ 492.664575] ? __pfx_kthread+0x10/0x10

[ 492.664578] ret_from_fork_asm+0x1a/0x30

[ 492.664586] </TASK>

[ 492.664588] Future hung task reports are suppressed, see sysctl kernel.hung_task_warnings

[ 830.694695] Buffer I/O error on dev dm-1, logical block 0, async page read

[ 830.694706] Buffer I/O error on dev dm-1, logical block 0, async page read

[ 830.934602] Buffer I/O error on dev dm-1, logical block 0, async page read

[ 830.934613] Buffer I/O error on dev dm-1, logical block 0, async page read

[ 830.934656] Buffer I/O error on dev dm-1, logical block 0, async page read

[ 830.934662] Buffer I/O error on dev dm-1, logical block 0, async page read

[ 833.966923] Buffer I/O error on dev dm-1, logical block 0, async page read

[ 833.966935] Buffer I/O error on dev dm-1, logical block 0, async page read

When you imported it into Ubuntu, did you use readonly or import it normally?

I’m starting to think this is a manifestation of the bug triggered by indirect vdev + block-cloning.

It appears at one point you used block-cloning on a 75GB file. Do you remember if this was before or after you removed a vdev? (Not to be confused with a disk removal. It appears you removed an entire stripe or mirror vdev at one point.)
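For reference, that reading comes from the bclone* rows in the `zpool get all` listing. Here is a small sketch of a filter that pulls just the relevant rows out of that long output. The function name `cloning_props` is made up for illustration, and it only reads text on stdin, so it can be run against saved output.

```shell
#!/bin/sh
# cloning_props is a hypothetical helper name, not a ZFS command.
# It filters `zpool get all` output (columns: NAME PROPERTY VALUE SOURCE)
# down to the block-cloning counters and the two features discussed here.
cloning_props() {
    awk '$2 ~ /^bclone/ ||
         $2 == "feature@block_cloning" ||
         $2 == "feature@device_removal"'
}

# On a live system the same rows can be requested directly:
#   zpool get bcloneused,bclonesaved,bcloneratio,feature@block_cloning,feature@device_removal Server
# Sample rows copied from the output posted earlier in the thread:
cloning_props <<'EOF'
Server autotrim off default
Server bcloneused 75.2G -
Server bclonesaved 75.2G -
Server bcloneratio 2.00x -
Server feature@device_removal active local
Server feature@block_cloning active local
EOF
```

A non-zero bcloneused alongside feature@device_removal in the active state is the combination being discussed here.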

Imported normally, not read-only. I’m not sure what block cloning is; can it be triggered on its own? I did remove a drive from a stripe last year after it started failing.

Yes. It is done automatically when you “copy” a file if the software supports it.

You removed an entire stripe (single disk) vdev?

Can you import the pool as readonly in Ubuntu and check the following?

zpool history Server | head -n 2

zpool history Server | grep feature

zpool history Server | grep "remove\|detach"

My stripe was one 12 TB disk plus one 4 TB disk. The 4 TB disk failed, so I used the TrueNAS GUI to remove it from the pool, then ordered another 12 TB drive and created a mirror in the same pool.

My pool still seems stuck trying to export since last night. It’s been about 15 hours.

root@ubuntu:/home/ubuntu# zpool history Server | head -n 2

History for 'Server':

2021-09-15.06:26:14 zpool create -o feature@lz4_compress=enabled -o altroot=/mnt -o cachefile=/data/zfs/zpool.cache -o failmode=continue -o autoexpand=on -o ashift12 -o feature@async_destroy=enabled -o feature@empty_bpobj=enabled -o feature@multi_vdev_crash_dump=enabled -o feature@spacemap_histogram=enabled -o feature@enabled_txg=enabled -o feature@hole_birth=enabled -o feature@extensible_dataset=enabled -o feature@embedded_data=enabled -o feature@bookmarks=enabled -o feature@filesystem_limits=enabled -o feature@large_blocks=enabled -o feature@large_dnode=enabled -o feature@sha512=enabled -o feature@skein=enabled -o feature@userobj_accounting=enabled -o feature@encryption=enabled -o feature@project_quota=enabled -o feature@device_removal=enabled -o feature@obsolete_counts=enabled -o feature@zpool_checkpoint=enabled -o feature@spacemap_v2=enabled -o feature@allocation_classes=enabled -o feature@resilver_defer=enabled -o feature@bookmark_v2=enabled -o feature@redaction_bookmarks=enabled -o feature@redacted_datasets=enabled -o feature@bookmark_written=enabled -o feature@log_spacemap=enabled -o feature@livelist=enabled -o feature@device_rebuild=enabled -o feature@zstd_compress=enabled -O compression=lz4 -O aclinherit=passthrough -O mountpoint=/Server -O aclmode=passthrough Server /dev/gptid/d3795f60-15ed-11ec-9c53-d8bbc115a125

root@ubuntu:/home/ubuntu# zpool history Server | grep feature

2021-09-15.06:26:14 zpool create -o feature@lz4_compress=enabled -o altroot=/mnt -o cachefile=/data/zfs/zpool.cache -o failmode=continue -o autoexpand=on -o ashift12 -o feature@async_destroy=enabled -o feature@empty_bpobj=enabled -o feature@multi_vdev_crash_dump=enabled -o feature@spacemap_histogram=enabled -o feature@enabled_txg=enabled -o feature@hole_birth=enabled -o feature@extensible_dataset=enabled -o feature@embedded_data=enabled -o feature@bookmarks=enabled -o feature@filesystem_limits=enabled -o feature@large_blocks=enabled -o feature@large_dnode=enabled -o feature@sha512=enabled -o feature@skein=enabled -o feature@userobj_accounting=enabled -o feature@encryption=enabled -o feature@project_quota=enabled -o feature@device_removal=enabled -o feature@obsolete_counts=enabled -o feature@zpool_checkpoint=enabled -o feature@spacemap_v2=enabled -o feature@allocation_classes=enabled -o feature@resilver_defer=enabled -o feature@bookmark_v2=enabled -o feature@redaction_bookmarks=enabled -o feature@redacted_datasets=enabled -o feature@bookmark_written=enabled -o feature@log_spacemap=enabled -o feature@livelist=enabled -o feature@device_rebuild=enabled -o feature@zstd_compress=enabled -O compression=lz4 -O aclinherit=passthrough -O mountpoint=/Server -O aclmode=passthrough Server /dev/gptid/d3795f60-15ed-11ec-9c53-d8bbc115a125

2024-04-03.07:55:50 py-libzfs: zpool set feature@edonr=enabled Server

2024-04-03.07:55:50 py-libzfs: zpool set feature@draid=enabled Server

2024-04-03.07:55:50 py-libzfs: zpool set feature@zilsaxattr=enabled Server

2024-04-03.07:55:50 py-libzfs: zpool set feature@head_errlog=enabled Server

2024-04-03.07:55:51 py-libzfs: zpool set feature@blake3=enabled Server

2024-04-03.07:55:51 py-libzfs: zpool set feature@block_cloning=enabled Server

2024-04-03.07:55:51 py-libzfs: zpool set feature@vdev_zaps_v2=enabled Server

2025-01-12.03:53:47 py-libzfs: zpool set feature@redaction_list_spill=enabled Server

2025-01-12.03:53:48 py-libzfs: zpool set feature@raidz_expansion=enabled Server

2025-01-12.03:53:48 py-libzfs: zpool set feature@fast_dedup=enabled Server

root@ubuntu:/home/ubuntu# zpool history Server | grep "remove\|detach"

2024-06-04.10:34:25 py-libzfs: zpool remove Server /dev/sda2

2024-06-04.21:01:05 py-libzfs: zpool remove Server /dev/sda2

2024-06-05.21:12:04 py-libzfs: zpool remove Server /dev/sda2

April 3, 2024 is the earliest that block-cloning might have been used.

Do you remember when you removed the vdev? If it was after April 3, 2024, then it’s possible that you might have tripped the ZFS bug.[1]
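The same comparison can be done mechanically. This is a minimal sketch, assuming the history text is in the format shown above; the function name `removals_after_cloning` is made up for illustration. It prints every `zpool remove` entry logged after the `feature@block_cloning=enabled` entry. Note that "enabled" only marks the earliest point at which cloning could have been used, not proof that it was.

```shell
#!/bin/sh
# removals_after_cloning is a hypothetical helper, not a ZFS command.
# Reads `zpool history` text on stdin and prints each `zpool remove`
# entry that is timestamped after block_cloning was enabled.
removals_after_cloning() {
    awk '
        # Timestamps look like 2024-04-03.07:55:51, so they sort as strings.
        /feature@block_cloning=enabled/ { enabled = $1 }
        # Appending "" forces a string comparison in every awk.
        / zpool remove / && enabled != "" && ($1 "") > (enabled "") { print }
    '
}

# Sample input copied from the history output posted above.
removals_after_cloning <<'EOF'
2024-04-03.07:55:51 py-libzfs: zpool set feature@block_cloning=enabled Server
2024-06-04.10:34:25 py-libzfs: zpool remove Server /dev/sda2
2024-06-04.21:01:05 py-libzfs: zpool remove Server /dev/sda2
2024-06-05.21:12:04 py-libzfs: zpool remove Server /dev/sda2
EOF
```

On a live system the input would come from `zpool history Server` piped into the function.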

Can you use lsof to check if any processes are using paths within the mountpoint of the pool?

This might help:

lsof +D /Server

Replace /Server with the appropriate path if you imported it with a different “altroot”.

[1] Your kernel panic doesn’t look like the others, though.

I removed that drive in June 2024, so after April. I will check for processes when I get home today.

Since I can mount the pool anyway, would it be easier to just buy a new 12 TB drive, make a new pool, and clone my data across in Ubuntu? Then mount the new pool in TrueNAS, make a mirror with one of the old drives, and add the other as a spare?

I’ve been trying to avoid buying a new drive but I’m not really sure where to go from here if it can’t be fixed.

If you can import the pool in Ubuntu without any errors, even if you need to use readonly, then I would replicate everything to a new pool. I believe you tripped over the bug that was mentioned.

If you create the new pool with the latest version of TrueNAS CE, then you don’t need to worry about the bug anymore, since it’s been patched in upstream ZFS.

Alright so if I follow this process:

- New drive/new pool made on Truenas.

- Boot Ubuntu with old pool and new pool. Mount both.

- Replicate old files to new pool.

- Export pools, reboot into TrueNAS, then mount new pool.

- If all works fine, wipe old pool then use old drives as mirror/spare.

That sounds right.

You “import” pools, not “mount”. To be precise.

You won’t need to mount any dataset filesystems since you’ll be doing a full replication of all datasets from one pool to another. You can use the -N flag in the zpool import command to prevent it from automatically mounting any datasets.
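Put together, the migration could look roughly like the sketch below. It only prints the commands so they can be reviewed before anything is run. Several things here are assumptions and not from this thread: the new pool is called NewPool, a recursive snapshot named @migrate already exists on Server (a pool imported read-only cannot take new snapshots), and the helper name `print_migration_plan` is made up.

```shell
#!/bin/sh
# print_migration_plan is a hypothetical helper; it prints commands, runs nothing.
# Assumptions: $2 is the new pool's name and $3 is a recursive snapshot that
# already exists on the old pool (a read-only pool cannot create snapshots).
print_migration_plan() {
    old="${1:-Server}"
    new="${2:-NewPool}"
    snap="${3:-migrate}"
    # Import the damaged pool read-only, without mounting datasets (-N).
    echo "zpool import -N -o readonly=on $old"
    # Import the new pool, also without mounting.
    echo "zpool import -N $new"
    # Send all datasets, snapshots and properties (-R) and
    # receive them unmounted (-u) under a child dataset of the new pool.
    echo "zfs send -R $old@$snap | zfs receive -u $new/$old"
    # Export both pools before booting back into TrueNAS.
    echo "zpool export $new"
    echo "zpool export $old"
}

print_migration_plan Server NewPool migrate
```

Receiving under a child dataset (NewPool/Server here) is just one possible layout; the datasets can be renamed afterwards if a different structure is wanted.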

At least you’ll have a redundant pool during this process and you’ll be able to “extend” the new pool to go from a stripe to a mirror vdev.

Yeah sweet, I already have the 2 X 12tb in a mirror though. I think I’m gonna buy 2 X 18tb while I’m ordering, may as well extend my capacity and have it all mirrored, already over 80% full.

Have you guys ever used factory recertified drives, or is that not a good idea?

I’m using 6 manufacturer recertified drives for my NAS and backup NAS.

Make sure they are manufacturer recertified and not just “refurbished”. Seagate and Western Digital will put their name behind the product.