Been racking my brains for a while trying to diagnose this. More after my setup details.

-Latest versions of stable TrueNAS Scale, I think I started with a late v24, and now 25.04.2

-ASRock B550 Phantom Gaming 4 (I know, I know… “gaming”)

-Ryzen 5500GT (5600G in disguise)

-Seasonic G12 GC-550 (550W)

-128GB DDR4 (4x32GB Patriot Viper Steel 64GB DDR4 3600MHz CL18 1.35v)

-TPLink TX401 10GbE (both on NAS and W10 PC) - I think it’s AQC107 based.

-4x Toshiba MG09ACATE 18TB drives in RAIDZ1 for the dataset

-Micron 1100 - 512GB SSD for the OS

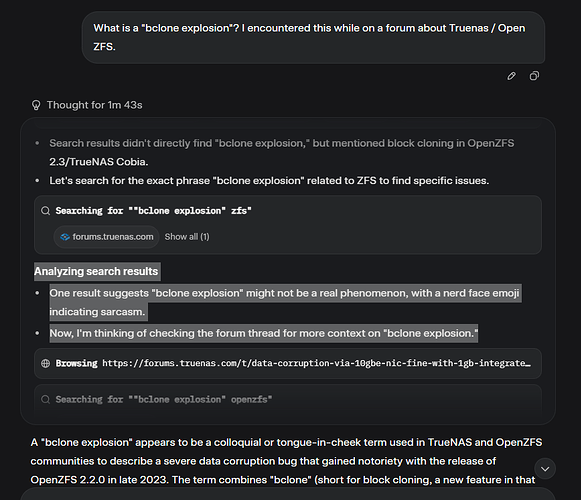

So the story, as tl’dr I can manage it, goes like this.

-First noticed checksum erros on scrubs. Random, 2-4 per drive, never the same drives. Could be 1, could be 3 drives. Never all. Drives pass all SMART tests and were working fine in my PC before I built the NAS.

After clearing, scrubs reported no errors until I transfered new data to the NAS.

-After a while, when editing video footage I’ve been noticing missing frames(even after scrubs).

Nothing “serious” because luckily I work with Nikon RAW NEV files which are basically many raw files in a sequence, so only the problematic frames were affected.

-Since then I was thinking it might be the RAM that corrupts files? Because it was difficult to connect a monitor to the NAS to check with MemTest(yeah, lazy) I started testing the 10GbE connection.

-Tried everything I, and my friend ChatGPT, could come up with:

![]() MTU/Jumbo frame mismatch between PC NIC and TrueNas NIC (TrueNas was on 9000, PC was on 9014). Best speeds even with them mismatched, saturating the connection (1.2GB/s) and drives (~650-700MB).

MTU/Jumbo frame mismatch between PC NIC and TrueNas NIC (TrueNas was on 9000, PC was on 9014). Best speeds even with them mismatched, saturating the connection (1.2GB/s) and drives (~650-700MB).

*corruption still there, copy/verify with teracopy or h2testw

![]() Defaulting to MTU1500 / Jumbo Frames disabled. Tanked speeds, ~300mb read, 800MB write in synthetics which usually got the 1.2GB/s speeds (via ARC, of course)

Defaulting to MTU1500 / Jumbo Frames disabled. Tanked speeds, ~300mb read, 800MB write in synthetics which usually got the 1.2GB/s speeds (via ARC, of course)

*corruption

![]() As ChatGPT said some Aquantia drivers are problematic and if I see this message, I should try disabling GRO/LRO. (I was, and I did)

As ChatGPT said some Aquantia drivers are problematic and if I see this message, I should try disabling GRO/LRO. (I was, and I did)

“Driver has suspect GRO implementation, TCP performance may be compromised”

*corruption

![]() When testing with h2wtest i even ran the verification twice, to verify if it’s corrupting files at read as well. Errors returned the same, so the transmission from the NAS is ok through the TX401. The files were badly written.

When testing with h2wtest i even ran the verification twice, to verify if it’s corrupting files at read as well. Errors returned the same, so the transmission from the NAS is ok through the TX401. The files were badly written.

Didn’t matter, MTU1500, MTU9014, GRO/LRO on or off, there was corruption

![]() My fantastic brain briliantly remembered that the motherboard has a 1GbE Realtek NIC that was connected to the internet via router for apps/updates. So it’s worth a try to transfer through it(and router) instead?

My fantastic brain briliantly remembered that the motherboard has a 1GbE Realtek NIC that was connected to the internet via router for apps/updates. So it’s worth a try to transfer through it(and router) instead?

** ![]() It was, both Teracopy and a H2Testw returned absolutely no errors, although I can count my new grey hairs from some simple 100GB tests.**

It was, both Teracopy and a H2Testw returned absolutely no errors, although I can count my new grey hairs from some simple 100GB tests.**

![]() What I’m thinking now is that I can rule out RAM/CPU/HDDs after these tests and put the blame on the TX401?

What I’m thinking now is that I can rule out RAM/CPU/HDDs after these tests and put the blame on the TX401?

![]() Can I try any other settings for the TX401, or should I just swap it with an X550-t2 ?

Can I try any other settings for the TX401, or should I just swap it with an X550-t2 ?

![]() Guessing no, but do I also need to swap theTX401 on the PC side as well?

Guessing no, but do I also need to swap theTX401 on the PC side as well?

Any other suggestions are really appreciated, maybe I can “salvage” the TX401?

Thanks for joining me in my sanity decreasing journey.